- Educational CPU microarchitecture simulator

- JavaScript CPU microarchitecture simulator

- Raspberry Pi Pico getting started

Born: 1965

Died: 2010+-ish

This is the lowest level of computer abstraction, at which the basic gates and power are described.

At this level, you are basically thinking about the 3D layered structure of a chip, and how to make machines that will allow you to create better, usually smaller, gates.

imec: The Semiconductor Watering Hole by Asianometry (2022)

Source. A key thing they do is have a small prototype fab that brings in-development equipment from different vendors together to make sure they are working well together. Cool. What a legendary place.

As mentioned at youtu.be/16BzIG0lrEs?t=397 from Video 4. "Applied Materials by Asianometry (2021)", originally the chip companies' fabs would make their own equipment. But eventually things got so complicated that it became worth it for separate companies to focus on equipment, which they then sell to the fabs.

As of 2020 leading makers of the most important fab photolithography equipment.

ASML: TSMC's Critical Supplier by Asianometry (2021)

Source.

How ASML Won Lithography by Asianometry (2021)

Source. First, Perkin-Elmer and Geophysics Corporation of America dominated the market.

Then a Japanese government project managed to make Nikon and Canon Inc. catch up, and in 1989, when Ciro Santilli was born, they had 70% of the market.

youtu.be/SB8qIO6Ti_M?t=240 By 1995, ASML had reached 25% market share. Then it managed to do the following faster than the others:

- TwinScan, reached 50% market share in 2002

- Immersion lithography

- EUV. There was a big split between EUV and particle beams, and ASML bet on EUV and EUV won.

- youtu.be/SB8qIO6Ti_M?t=459 they have an insane number of software engineers working on software for the machine, which is insanely complex. They are big on UML.

- youtu.be/SB8qIO6Ti_M?t=634 they use ZEISS optics, and don't develop their own. More precisely, the optics come from Carl Zeiss SMT, a majority-owned subsidiary of ZEISS.

- youtu.be/SB8qIO6Ti_M?t=703 IMEC collaborations worked well. Notably the ASML/Philips/ZEISS trinity.

- www.youtube.com/watch?v=XLNsYecX_2Q ASML: Chip making goes vacuum with EUV (2009). Self-promotional video, with some good shots of their buildings.

Parent/predecessor of ASML.

Applied Materials by Asianometry (2021)

Source. They are basically chemical vapor deposition fanatics.

This is the mantra of the semiconductor industry:

- power and area are the main limiting factors of chips, i.e., your budget:

- chip area is ultra expensive because there are sporadic errors in the fabrication process, and each error in any part of the chip can potentially break the entire chip. Although there are… The percentage of working chips is called the yield. In some cases however, e.g. if the error only affects a single core of a multi-core CPU, they actually deactivate the broken core after testing, and sell the worse CPU cheaper with clear branding of that: this is called binning: www.tomshardware.com/uk/reviews/glossary-binning-definition,5892.html

- power is a major semiconductor limit from the 2010s onwards. If everything turned on at once, the chip would burn. Designs have to account for that.

- performance is the goal. Conceptually, this is basically a set of algorithms that you want your hardware to solve, each one with a respective weight of importance. Serial performance is fundamentally limited by the longest path that electrons have to travel in a given clock cycle. The way to work around it is to create pipelines: split single operations into multiple smaller operations, and store the intermediate results in registers between the stages.

They put a lot of expensive equipment together, much of it made by other companies, and they make the entire chip for companies ordering them.

A list of fabs can be seen at: en.wikipedia.org/wiki/List_of_semiconductor_fabrication_plants and basically summarizes all the companies that have fabs.


Some nice insights at: Robert Noyce: The Man Behind the Microchip by Leslie Berlin (2006).

AMD just gave up this risky part of the business amidst the fabless boom. Sounds like a wise move. They then fell more and more away from the state of the art, and moved into more niche areas.

SMIC, Explained by Asianometry (2021)

Source. One of the companies that have fabs, buying machines from companies such as ASML and putting them together in so-called "silicon fabs" to make the chips.

As the quintessential fab for fabless companies, there is one thing TSMC can never ever do: sell its own designs! It must forever remain a fab-only company that will never compete with its customers. This is highlighted e.g. at youtu.be/TRZqE6H-dww?t=936 from Video 33. "How Nvidia Won Graphics Cards by Asianometry (2021)".

How Taiwan Created TSMC by Asianometry (2020)

Source. Some points:

- UMC failed because it focused too much on the internal market and was shielded from external competition, so it didn't become world-leading

- one of TSMC's great advances was the fabless business model approach.

- they managed to do large technology transfers from the West to kick things off

- one of their main victories was investing early in CMOS, before it became huge, and winning that market

Basically what register transfer level compiles to in order to achieve a real chip implementation.

After this is done, the final step is place and route.

They can be designed by third parties besides the semiconductor fabrication plants. E.g. Arm Ltd. markets its Artisan Standard Cell Libraries as mentioned e.g. at: web.archive.org/web/20211007050341/https://developer.arm.com/ip-products/physical-ip/logic This came from a 2004 acquisition: www.eetimes.com/arm-to-acquire-artisan-components-for-913-million/, obviously.

The standard cell library is typically composed of a bunch of versions of somewhat simple gates, e.g.:

- AND with 2 inputs

- AND with 3 inputs

- AND with 4 inputs

- OR with 2 inputs

- OR with 3 inputs

and so on.

Each of those gates has to be designed by hand as a 3D structure that can be produced in a given fab.

Simulations are then carried out, and the electric properties of those structures are characterized in a standard way as a bunch of tables of numbers that specify things like:

- how long it takes for electrons to pass through

- how much heat it produces

Those are then used in power, performance and area estimates.

Open source ones:

- www.quora.com/Are-there-good-open-source-standard-cell-libraries-to-learn-IC-synthesis-with-EDA-tools/answer/Ciro-Santilli Are there good open source standard cell libraries to learn IC synthesis with EDA tools?

A set of software programs that compile high level register transfer level languages such as Verilog into something that a fab can actually produce. One is reminded of a compiler toolchain but on a lower level.

The most important steps of that include:

- logic synthesis: mapping the Verilog to a standard cell library

- place and route: mapping the synthesis output into the 2D surface of the chip

Step of electronic design automation that maps the register transfer level input (e.g. Verilog) to a standard cell library.

The output of this step is another Verilog file, but one that exclusively uses interlinked cell library components.

Given a bunch of interlinked standard cell library elements from the logic synthesis step, actually decide where exactly they are going to go on the 2D (or stacked 2D) integrated circuit surface, and how the wires between them are routed.

A sample output format of place and route is GDSII.

The main ones as of 2020 are:

- Mentor Graphics, which was bought by Siemens in 2017

- Cadence Design Systems

- Synopsys

They apparently even produced a real working small RISC-V chip with the flow, not bad.

Very good channel to learn some basics of semiconductor device fabrication!

Focuses mostly on the semiconductor industry.

youtu.be/aL_kzMlqgt4?t=661 from Video 5. "SMIC, Explained by Asianometry (2021)" mentions he is of Chinese descent, with ancestors from Ningbo. Earlier in the same video he mentions he worked on some startups. Based on his pronunciation of Chinese names, he doesn't appear to speak perfect Mandarin Chinese anymore.

asianometry.substack.com/ gives an abbreviated name "Jon Y".

Reflecting on Asianometry in 2022 by Asianometry (2022)

Source. Mentions his insane work schedule: 4 hours of research in the morning, then his day job, then editing and uploading until midnight. Appears to be based in Taipei. Two videos a week. So even at the current 400k subs, he still can't make a living.

It is quite amazing to read through books such as The Supermen: The Story of Seymour Cray by Charles J. Murray (1997), as it makes you notice that earlier CPUs (all before the 1970s) were not made with integrated circuits, but rather from smaller pieces glued together on PCBs! E.g. the arithmetic logic unit was actually a discrete component at one point.

The reason for this can also be understood quite clearly by reading books such as Robert Noyce: The Man Behind the Microchip by Leslie Berlin (2006). The first integrated circuits were just too small for this. It was initially unimaginable that a CPU would fit in a single chip! Even just having a very small number of components on a chip was already revolutionary and enough to kick-start the industry. Just imagine how much money any level of integration saved in those early days for production, e.g. as opposed to manually soldering point-to-point constructions. The reliability, size and weight gains were also amazing, originally in particular for military and space applications.

A briefing on semiconductors by Fairchild Semiconductor (1967)

Source. Uploaded by the Computer History Museum. There is value in tutorials written by early pioneers of the field: this is pure gold.

Shows:

- photomasks

- silicon ingots and wafer processing

Register transfer level is the abstraction level at which computer chips are mostly designed.

The only two truly relevant RTL languages as of 2020 are: Verilog and VHDL. Everything else compiles to those, because that's all that EDA vendors support.

Much like a C compiler abstracts away the CPU assembly to:

- increase portability across ISAs

- do optimizations that programmers can't feasibly do without going crazy

Compilers for RTL languages such as Verilog and VHDL abstract away the details of the specific semiconductor technology used for those exact same reasons.

The compilers essentially compile the RTL languages into a standard cell library.

Examples of companies that work at this level include:

- Intel. Intel also has semiconductor fabrication plants however.

- Arm which does not have fabs, and is therefore called a "fabless" company.

In the past, most computer designers would have their own fabs.

But once designs started getting very complicated, it started to make sense to separate concerns between designers and fabs.

What this means is that design companies would primarily write register transfer level, then use electronic design automation tools to get a final manufacturable chip, and then send that to the fab.

It was at this point in time that TSMC came along, both benefiting from and helping to establish this trend.

The term "Fabless" could in theory refer to other areas of industry besides the semiconductor industry, but it is mostly used in that context.


One very good thing about this is that it makes it easy to create test cases directly in C++. You just supply inputs and clock the simulation directly in a C++ loop, then read outputs and assert them with assert(). And you can inspect variables by printing them or with GDB. This is infinitely more convenient than doing these IO-type tasks in Verilog itself.

Some simulation examples under verilog.

First install Verilator. On Ubuntu:

sudo apt install verilator

Tested on Verilator 4.038, Ubuntu 22.04.

Run all examples, which have assertions in them:

cd verilator

make run

File structure is for example:

- verilog/counter.v: Verilog file

- verilog/counter.cpp: C++ loop which clocks the design and runs tests with assertions on the outputs

- verilog/counter.params: gcc compilation flags for this example

- verilog/counter_tb.v: Verilog version of the C++ test. Not used by Verilator. Verilator can't actually run our _tb files, because they do IO things in Verilog that we do better from C++ in Verilator, so Verilator didn't bother implementing them. This is a good thing.

Example list:

- verilog/negator.v, verilog/negator.cpp: the simplest non-identity combinatorial circuit!

- verilog/counter.v, verilog/counter.cpp: sequential hello world. Synchronous active high reset with active high enable signal. Adapted from: www.asic-world.com/verilog/first1.html

- verilog/subleq.v, verilog/subleq.cpp: subleq one instruction set computer with separated instruction and data RAMs

The example under verilog/interactive showcases how to create a simple interactive visual Verilog example using Verilator and SDL.

You could e.g. expand such an example to create a simple (or complex) video game if you were insane enough. But please don't waste your time doing that, Ciro Santilli begs you.

The example is also described at: stackoverflow.com/questions/38108243/is-it-possible-to-do-interactive-user-input-and-output-simulation-in-vhdl-or-ver/38174654#38174654

Usage: install dependencies:

sudo apt install libsdl2-dev verilator

then run as either:

make run RUN=and2

make run RUN=move

Tested on Verilator 4.038, Ubuntu 22.04.

File overview:

In those examples, the more interesting application specific logic is delegated to Verilog (e.g.: move game character on map), while boring timing and display matters can be handled by SDL and C++.

Examples under vhdl.

Run all examples, which have assertions in them:

cd vhdl

./run

Files:

- Examples

  - Basic

    - vhdl/hello_world_tb.vhdl: hello world

    - vhdl/min_tb.vhdl: min

    - vhdl/assert_tb.vhdl: assert

    - Lexer

      - vhdl/comments_tb.vhdl: comments

      - vhdl/case_insensitive_tb.vhdl: case insensitive

      - vhdl/whitespace_tb.vhdl: whitespace

      - vhdl/literals_tb.vhdl: literals

    - Flow control

      - vhdl/procedure_tb.vhdl: procedure

      - vhdl/function_tb.vhdl: function

      - vhdl/operators_tb.vhdl: operators

    - Types

      - vhdl/integer_types_tb.vhdl: integer types

      - vhdl/array_tb.vhdl: array

      - vhdl/record_tb.vhdl.bak: record. TODO fails with "GHDL Bug occurred" on GHDL 1.0.0

      - vhdl/generic_tb.vhdl: generic

    - vhdl/package_test_tb.vhdl: Packages

    - vhdl/standard_package_tb.vhdl: standard package

    - textio

      - vhdl/write_tb.vhdl: write

      - vhdl/read_tb.vhdl: read

    - vhdl/std_logic_tb.vhdl: std_logic

    - vhdl/stop_delta_tb.vhdl: --stop-delta

  - Applications

    - Combinatoric

      - vhdl/adder.vhdl: adder

      - vhdl/sqrt8_tb.vhdl: sqrt8

    - Sequential

      - vhdl/clock_tb.vhdl: clock

      - vhdl/counter.vhdl: counter

- Helpers

  - vhdl/template_tb.vhdl: template


Some examples:

Ubuntu 25.04 GCC 14.2 -O0 x86_64 produces a horrendous loop that goes through memory on every iteration:

11c8: 48 83 45 f0 01 addq $0x1,-0x10(%rbp)

11cd: 48 8b 45 f0 mov -0x10(%rbp),%rax

11d1: 48 3b 45 e8 cmp -0x18(%rbp),%rax

11d5: 72 f1 jb 11c8 <main+0x7f>

To take about 1s on P14s we need 2.5 billion loop iterations, i.e. about 10 billion instructions at 4 instructions per iteration:

time ./inc_loop.out 2500000000

and:

time ./inc_loop.out 2500000000

gives:

1,052.22 msec task-clock # 0.998 CPUs utilized

23 context-switches # 21.858 /sec

12 cpu-migrations # 11.404 /sec

60 page-faults # 57.022 /sec

10,015,198,766 instructions # 2.08 insn per cycle

# 0.00 stalled cycles per insn

4,803,504,602 cycles # 4.565 GHz

20,705,659 stalled-cycles-frontend # 0.43% frontend cycles idle

2,503,079,267 branches # 2.379 G/sec

396,228 branch-misses # 0.02% of all branches

With -O3 it manages to optimize the loop away entirely, producing:

1078: e8 d3 ff ff ff call 1050 <strtoll@plt>

}

107d: 5a pop %rdx

107e: c3 ret

So it is smart enough to just return the return value of strtoll directly, as it already is in rax.

c/inc_loop.c

#include <stdlib.h>

int main(int argc, char **argv) {

unsigned long long max;

if (argc > 1) {

max = strtoll(argv[1], NULL, 0);

} else {

max = 1;

}

unsigned long long ret;

for (ret = 0; ret < max; ret++) {}

return ret;

}

This is the only way that we've managed to reliably get a single inc instruction loop: by using inline assembly. E.g. on x86 we do:

loop:

inc %[i];

cmp %[max], %[i];

jb loop;

For 1s on P14s Ubuntu 25.04 GCC 14.2 -O0 x86_64 we need about 5 billion iterations:

time ./inc_loop_asm.out 5000000000

c/inc_loop_asm.c

#include <stdlib.h>

#include <stdint.h>

int main(int argc, char **argv) {

uint64_t max, i;

if (argc > 1) {

max = strtoll(argv[1], NULL, 0);

} else {

max = 1;

}

i = max;

#if defined(__x86_64__) || defined(__i386__)

__asm__ (

"loop:"

"dec %[i];"

"jne loop;"

: [i] "+r" (i)

:

:

);

#endif

return i;

}

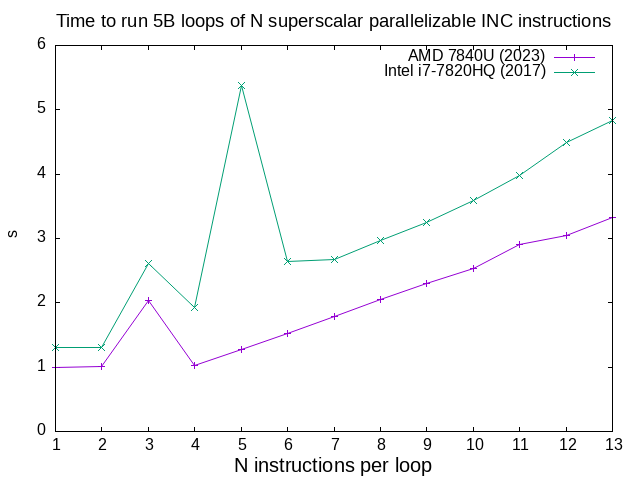

This is a quick microarchitectural benchmark to try and determine how many functional units our CPU has that can execute an inc instruction at the same time, thanks to its superscalar architecture. The generated programs do loops like the following, with different numbers of inc instructions:

loop:

inc %[i0];

inc %[i1];

inc %[i2];

...

inc %[i_n];

cmp %[max], %[i0];

jb loop;

c/inc_loop_asm_n.sh results for a few CPUs.

Quite clearly:

- AMD 7840U can run INC on 4 functional units

- Intel i7-7820HQ can run INC on 2 functional units

Both also show low instruction count effects that destroy performance: AMD at 3, and Intel at 3 and 5. TODO it would be cool to understand those better.

Data from multiple CPUs was collated and plotted manually with c/inc_loop_asm_n_manual.sh.

Announced at:


c/mul_loop_asm.c

#include <stdlib.h>

#include <stdint.h>

int main(int argc, char **argv) {

uint64_t max, i, x0;

if (argc > 1) {

max = strtoll(argv[1], NULL, 0);

} else {

max = 1;

}

i = max;

x0 = 1;

#if defined(__x86_64__) || defined(__i386__)

__asm__ (

"mov %[x0], %%rax;"

"mov $2, %%rbx;"

".align 64;"

"loop:"

"mul %%rbx;"

"dec %[i];"

"jne loop;"

"mov %%rax, %[x0];"

: [i] "+r" (i),

[x0] "+r" (x0)

:

: "rax",

"rbx",

"rdx"

);

#endif

return x0;

}

c/mul_loop_asm_2.c

#include <stdlib.h>

#include <stdint.h>

int main(int argc, char **argv) {

uint64_t max, i, x0, x1;

if (argc > 1) {

max = strtoll(argv[1], NULL, 0);

} else {

max = 1;

}

i = max;

x0 = 1;

x1 = 1;

#if defined(__x86_64__) || defined(__i386__)

__asm__ (

"mov $2, %%rbx;"

".align 64;"

"loop:"

"mov %[x0], %%rax;"

"mul %%rbx;"

"mov %%rax, %[x0];"

"mov %[x1], %%rax;"

"mul %%rbx;"

"mov %%rax, %[x1];"

"dec %[i];"

"jne loop;"

: [i] "+r" (i),

[x0] "+r" (x0),

[x1] "+r" (x1)

:

: "rax",

"rbx" ,

"rdx"

);

#endif

return x0 + x1;

}


The main interface between the central processing unit and software.

A human readable way to write instructions for an instruction set architecture.

One of the topics covered in Ciro Santilli's Linux Kernel Module Cheat.

List of instruction set architecture.

This ISA basically completely dominated the smartphone market of the 2010s and beyond. It started appearing in other areas as the end of Moore's law made it more economical for large companies to start developing their own semiconductors, e.g. Google custom silicon, Amazon custom silicon.

It is exciting to see ARM entering the server, desktop and supercomputer market circa 2020, beyond its dominant mobile position and roots.

Ciro Santilli likes to see the underdogs rise and take a bite out of the dominant ones.

Conversely, the same excitement also applies to RISC-V possibly taking over the ARM mobile market one day.

Basically, if you were a huge company seeking to develop a CPU and able to control your own ecosystem independently of Windows' desktop domination (held in place by the need for backward compatibility with a billion end-user programs), ARM would be a possibility on your mind.

- in 2020, the Fugaku supercomputer, which uses an ARM-based Fujitsu-designed chip, became the number 1 fastest supercomputer in TOP500: www.top500.org/lists/top500/2021/11/ It was later beaten by an x86 supercomputer: www.top500.org/lists/top500/2022/06/, but the message was clearly heard.

- 2012 hackaday.com/2012/07/09/pedal-powered-32-core-arm-linux-server/ pedal-powered 32-core Arm Linux server. A publicity stunt, but still, cool.

- AWS Graviton

The leading no-royalties options as of 2020.

China has been a major potential RISC-V user since the late 2010s, as the country is trying to increase its semiconductor industry independence, especially given economic sanctions imposed by the USA.

E.g., as a result of this, the RISC-V Foundation moved its legal headquarters to Switzerland in 2019 to try and overcome some of the sanctions.


Leading RISC-V consultants as of 2020, they are basically trying to become the Red Hat of the semiconductor industry.

Risky name with the Si prefix, too close to SiFive. Both are a reference to silicon no doubt, but still. If they stick around, they will one day rename.

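TODO: the interrupt is firing only once. A likely cause is that mtrap ends with j spin instead of mret. Taking the trap clears MSTATUS.MIE (saving the old value in MSTATUS.MPIE), and only mret restores it, so interrupts stay disabled after the first one.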

Adapted from: danielmangum.com/posts/risc-v-bytes-timer-interrupts/

Tested on Ubuntu 23.10:

sudo apt install binutils-riscv64-unknown-elf qemu-system-misc gdb-multiarch

cd riscv

make

Then on shell 1:

qemu-system-riscv64 -machine virt -cpu rv64 -smp 1 -s -S -nographic -bios none -kernel timer.elf

and on shell 2:

gdb-multiarch timer.elf -nh -ex "target remote :1234" -ex 'display /i $pc' -ex 'break *mtrap' -ex 'display *0x2004000' -ex 'display *0x200BFF8'

GDB should break infinitely many times on mtrap as interrupts happen.

riscv/timer.S

/* Adapted from: https://danielmangum.com/posts/risc-v-bytes-timer-interrupts/ */

.option norvc

.section .text

.global _start

_start:

/* Sets MSTATUS.MPIE (bit 7) and MSTATUS.SPP (bit 8). */

li t0, (0b11 << 7)

csrs mstatus, t0

/* MTVEC = mtrap

Where to jump after each timer interrupt. */

la t0, mtrap

csrw mtvec, t0

/* setup timer */

/* mtime */

li t1, 0x200BFF8

lw t0, 0(t1)

li t2, 50000

add t0, t0, t2

/* mtimecmp */

li t1, 0x2004000

sw t0, 0(t1)

/* MSTATUS.MIE = 1 */

li t0, (1 << 3)

csrs mstatus, t0

/* MIE.MTIE = 1 */

li t0, (1 << 7)

csrs mie, t0

spin:

j spin

mtrap:

/* setup timer */

/* mtime */

li t1, 0x200BFF8

ld t0, 0(t1)

li t2, 50000

add t0, t0, t2

/* mtimecmp */

li t1, 0x2004000

sd t0, 0(t1)

j spin

Intel is known to have created customized chips for very large clients.

This is mentioned e.g. at: www.theregister.com/2021/03/23/google_to_build_server_socs/

Those chips are then used only in large-scale server deployments of those very large clients. Google is most likely one of them, given its penchant for Google custom hardware.

Intel is known to do custom-ish cuts of Xeons for big customers.

TODO better sources.

esolangs.org/wiki/Y86 mentions:

Y86 is a toy RISC CPU instruction set for education purpose.

One specification at: web.cse.ohio-state.edu/~reeves.92/CSE2421sp13/PracticeProblemsY86.pdf


As of the 2020s, it is basically a cheap/slow/simple CPU used in embedded system applications.


There were still no amazing open source implementations as of 2025.

This section is about emulation setups that simulate both the microcontroller as well as the electronics it controls.

Bibliography:

People seeking QEMU-based solutions:

The first thing you must understand is the Classic RISC pipeline with a concrete example.

The good:

- slick UI! But the characters are very hard to read: they're way too small.

- attempts to show state diffs with a flash. But it goes by too fast: it would be better if it were more permanent

- Reverse debugging

The bad:

The good:

- Reverse debugging

- circuit diagram

The bad:

- Clunky UI

- circuit diagram doesn't show any state??

Basically a synonym for central processing unit nowadays: electronics.stackexchange.com/questions/44740/whats-the-difference-between-a-microprocessor-and-a-cpu

The whole point of Intel SGX is to allow users to be certain that certain code was executed on a remote server that they rent but don't own, like AWS. Even if AWS wanted to be malicious, they would still not be able to read your input or output, nor modify the program.

The way this seems to work is as follows.

Each chip has its own unique private key embedded in the chip. There is no way for software to read that private key: only the hardware can read it. Intel does not know that private key either, only the corresponding public one. The entire safety of the system relies on this key never ever leaking to anybody, even if they have the CPU in their hands. A big question is whether there are physical forensic methods, e.g. using electron microscopes, that would allow this key to be identified.

Then, using that private key, you can create enclaves.

Once you have an enclave, you can load a certain code to run into the enclave.

Then, non-secure users can give inputs to that enclave, and as an output, they get not only the output result, but also a public key certificate based on the internal private key.

This certificate states:

- given input X

- program Y

- produced output Z

and that can then be verified online on Intel's website, since they keep a list of public keys. This service is called attestation.

So, if the certificate is verified, you can be certain that your input was run by a specific program.

Additionally:

- you can public-key encrypt your input to the enclave with the enclave's public key, and then ask the enclave to send the output back encrypted with your key. This way the hardware owner can read neither the input nor the output

- all data stored on RAM is encrypted by the enclave, to prevent attacks that rely on using a modified RAM that logs data

It basically replaces a bunch of discrete digital components with a single chip. So you don't have to wire things manually.

Particularly fundamental if you were putting those chips up a thousand cell towers for signal processing, and ever felt the need to reprogram them! Resoldering would be fun, wouldn't it? So you just do an over-the-wire update of everything.

Vs a microcontroller: same reason why you would want to use discrete components: speed. Especially when you want to do a bunch of things in parallel fast.

One limitation is that it only handles digital electronics: electronics.stackexchange.com/questions/25525/are-there-any-analog-fpgas There are some analog analogs, but they are much more restricted due to signal loss, which is exactly what digital electronics is very good at mitigating.

First FPGA experiences with a Digilent Cora Z7 Xilinx Zynq by Marco Reps (2018)

Source. Good video, actually gives some rationale of a use case that a microcontroller wouldn't handle because it is not fast enough.

The History of the FPGA by Asianometry (2022)

Source.

They are located in separate chips from the GPU's compute, since just like for CPUs, you can't put both on the same chip: the manufacturing processes are different and incompatible.

- github.com/ekondis/mixbench GPL

- github.com/ProjectPhysX/OpenCL-Benchmark custom non-commercial, non-military license

Example: github.com/cirosantilli/cpp-cheat/blob/d18a11865ac105507d036f8f12a457ad9686a664/cuda/inc.cu

Official hello world: github.com/ROCm/HIP-Examples/blob/ff8123937c8851d86b1edfbad9f032462c48aa05/HIP-Examples-Applications/HelloWorld/HelloWorld.cpp

Tested on Ubuntu 23.10 with P14s:

sudo apt install hipcc

git clone https://github.com/ROCm/HIP-Examples

cd HIP-Examples/HIP-Examples-Applications/HelloWorld

make

TODO fails with:

/bin/hipcc -g -c -o HelloWorld.o HelloWorld.cpp

clang: error: cannot find ROCm device library for gfx1103; provide its path via '--rocm-path' or '--rocm-device-lib-path', or pass '-nogpulib' to build without ROCm device library

make: *** [<builtin>: HelloWorld.o] Error 1

Generic Ubuntu install bibliography:

The Coming AI Chip Boom by Asianometry (2022)

Source.

- 2020: AWS Trainium, e.g. techcrunch.com/2020/12/01/aws-launches-trainium-its-new-custom-ml-training-chip/

- 2018: AWS Inferentia, mentioned at en.wikipedia.org/wiki/Annapurna_Labs


Served as input, output and storage system in the early days!

Using Punch Cards by Bubbles Whiting (2016)

Source. Interview at The Centre for Computing History.

Once Upon A Punched Card by IBM (1964)

Source. Goes on a bit too long, but still cool.

The 1890 US Census and the history of punchcard computing by Stand-up Maths (2020)

Source. It was basically a counting machine! Shows a reconstruction at the Computer History Museum.

One of the most enduring forms of storage! Started in the 1950s, but still used in the 2020s as the cheapest (and slowest access) archival method. Robot arms are needed to load and read them nowadays.

Web camera mounted inside an IBM TS4500 tape library by lkaptoor (2020)

Source. Footage dated 2018.

In conventional speech of the early 2000s, it is basically a synonym for dynamic random-access memory.

DRAM is often shortened to just random-access memory.

The opposite of volatile memory.


You can't just shred individual SSD files, because SSDs can only erase data at large granularities, so the hardware/drivers have to copy stuff around all the time to compact it. This means that stale copies are left around everywhere.

What you can do however is to erase the entire thing with vendor support, which most hardware has support for. On hardware encrypted disks, you can even just erase the keys:

TODO does shredding the

The Engineering Puzzle of Storing Trillions of Bits in your Smartphone / SSD using Quantum Mechanics by Branch Education (2020)

Source. Nice animations show how quantum tunnelling is used to set bits in flash memory.

Dvorak users will automatically go to Heaven.


Electronic Ink such as that found on Amazon Kindle is the greatest invention ever made by man.

Once E Ink reaches reasonable refresh rates to replace liquid crystal displays, the world will finally be saved.

It would allow Ciro Santilli to spend his entire life in front of a screen rather than in the real world without getting tired eyes, even when it is sunny outside.

Ciro stopped reading non-code non-news a while back though, so the current refresh rates are useless, what a shame.

OMG, this is amazing: getfreewrite.com/

PDF table of contents feature requests: twitter.com/cirosantilli/status/1459844683925008385

Remarkable 2 is really, really good. Relatively fast refresh + touchscreen is amazing.

No official public feedback forum unfortunately:

PDF table of contents could be better: twitter.com/cirosantilli/status/1459844683925008385

Display size: 10.3 inches. Perfect size

Way, way before instant messaging, there was... teletype!

Using a 1930 Teletype as a Linux Terminal by CuriousMarc (2020)

Source.

PCIe computer explained by ExplainingComputers (2018)

Source.

lspci is the name of several versions of CLI tools used in UNIX-like systems to query information about PCI devices in the system. On Ubuntu 23.10, it is provided by the pciutils package, which is so dominant that when we say "lspci" without qualification, that's what we mean.

Software project that provides lspci.

stackoverflow.com/questions/59010671/how-to-get-vendor-id-and-device-id-of-all-pci-devices

grep PCI_ID /sys/bus/pci/devices/*/uevent

lspci is missing such basic functionality!

Our definition of fog computing: a system that uses the computational resources of individuals who volunteer their own devices, in which you give each of the volunteers part of a computational problem that you want to solve.

Folding@home and SETI@home are perfect examples of that definition.


Advantages of fog: there is only one, reusing hardware that would be otherwise idle.

Disadvantages:

- in cloud, you can put your datacenter on the location with the cheapest possible power. On fog you can't.

- on fog there is some waste due to network communication.

- you will likely optimize code less well because you might be targeting a wide array of different types of hardware, so more power (and time) is wasted. Furthermore, some of the hardware used will not be optimal for the task, e.g. a CPU instead of a GPU.

All of this makes Ciro Santilli suspect that it would be more efficient for volunteers to simply donate money rather than donate inefficiently used power.

Bibliography:

- greenfoldingathome.com/2018/05/28/is-foldinghome-a-waste-of-electricity/: useless article, does not compare to centralized computing, and instead asks if folding the proteins is worth the power usage...

Basically means "company with huge server farms, which usually rents them out", like Amazon AWS or Google Cloud Platform.

Global electricity use by data center type: 2010 vs 2018. Source.

The growth of hyperscaler cloud vs smaller cloud and private deployments was incredible in that period!

Google BigQuery alternative.

They can't even make this basic stuff just work!

Let's get SSH access, install a package, and run a server.

As of December 2023, on a t2.micro instance (the only one that was part of the free tier at the time, with an advertised 1 vCPU, 1 GiB RAM and 8 GiB disk for the first 12 months) running Ubuntu 22.04:

$ free -h

total used free shared buff/cache available

Mem: 949Mi 149Mi 210Mi 0.0Ki 590Mi 641Mi

Swap: 0B 0B 0B

$ nproc

1

$ df -h /

Filesystem Size Used Avail Use% Mounted on

/dev/root 7.6G 1.8G 5.8G 24% /

To install software:

sudo apt update

sudo apt install cowsay

cowsay asdf

Once HTTP inbound traffic is enabled on the security rules for port 80, you can run:

while true; do printf "HTTP/1.1 200 OK\r\n\r\n`date`: hello from AWS" | sudo nc -Nl 80; done

and then you are able to curl from your local computer and get the response.

As of December 2023, the cheapest instance with an Nvidia GPU is g4dn.xlarge, so let's try that out. In that instance, lspci contains:

00:1e.0 3D controller: NVIDIA Corporation TU104GL [Tesla T4] (rev a1)

so we see that it runs an Nvidia T4 GPU.

Be careful not to confuse it with g4ad.xlarge, which has an AMD GPU instead. TODO meaning of "ad"? "a" presumably means AMD, but what is the "d"?

Some documentation on which GPU is in each instance can be seen at: docs.aws.amazon.com/dlami/latest/devguide/gpu.html (archive), with a list of which GPUs they have at that random point in time. Can the GPU ever change for a given instance name? Likely not. Also, as of December 2023 the list is already outdated, e.g. P5 is not shown, though it is mentioned at: aws.amazon.com/ec2/instance-types/p5/

When selecting the instance to launch, the GPU apparently does not show anywhere on the instance information page, it is so bad!

Also note that this instance has 4 vCPUs, so on a new account you must first make a customer support request to Amazon to increase your limit from the default of 0 to 4, see also: stackoverflow.com/questions/68347900/you-have-requested-more-vcpu-capacity-than-your-current-vcpu-limit-of-0, otherwise instance launch will fail with:

You have requested more vCPU capacity than your current vCPU limit of 0 allows for the instance bucket that the specified instance type belongs to. Please visit aws.amazon.com/contact-us/ec2-request to request an adjustment to this limit.

When starting up the instance, also select:

- image: Ubuntu 22.04

- storage size: 30 GB (maximum free tier allowance)

Once you finally manage to SSH into the instance, first we have to install drivers and reboot:

sudo apt update

sudo apt install nvidia-driver-510 nvidia-utils-510 nvidia-cuda-toolkit

sudo reboot

and now running:

nvidia-smi

shows something like:

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.147.05 Driver Version: 525.147.05 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:1E.0 Off | 0 |

| N/A 25C P8 12W / 70W | 2MiB / 15360MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

If we start from the raw Ubuntu 22.04, first we have to install drivers:

- docs.aws.amazon.com/AWSEC2/latest/UserGuide/install-nvidia-driver.html official docs

- stackoverflow.com/questions/63689325/how-to-activate-the-use-of-a-gpu-on-aws-ec2-instance

- askubuntu.com/questions/1109662/how-do-i-install-cuda-on-an-ec2-ubuntu-18-04-instance

- askubuntu.com/questions/1397934/how-to-install-nvidia-cuda-driver-on-aws-ec2-instance
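Once the drivers are installed, a script can check which GPU an instance actually has, which is handy given that the launch page does not show it. What follows is a minimal Python sketch built on nvidia-smi's `--query-gpu` CSV mode. The choice of fields and the helper names are assumptions made here. `nvidia-smi --help-query-gpu` lists every available field.

```python
import subprocess

# Fields to ask nvidia-smi for; see `nvidia-smi --help-query-gpu` for the full list.
FIELDS = ['name', 'memory.total', 'driver_version']


def parse_query_output(text):
    """Turn `--format=csv,noheader` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(',')]
        gpus.append(dict(zip(FIELDS, values)))
    return gpus


def query_gpus():
    """Run nvidia-smi and return the parsed per-GPU fields."""
    out = subprocess.run(
        ['nvidia-smi', '--query-gpu=' + ','.join(FIELDS), '--format=csv,noheader'],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_query_output(out)


if __name__ == '__main__':
    for gpu in query_gpus():
        print(gpu)
```

Parsing is kept separate from the subprocess call so it can be checked without a GPU.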

From there basically everything should just work as normal. E.g. we were able to run a CUDA hello world just fine with:

nvcc inc.cu

./a.out

One issue with this setup, besides the time it takes to set up, is that you might also have to pay some network charges as it downloads a bunch of stuff into the instance. We should try out some of the pre-built images. But it is also good to know this pristine setup just in case.

We then managed to run Ollama just fine with:

curl https://ollama.ai/install.sh | sh

/bin/time ollama run llama2 'What is quantum field theory?'

which gave:

0.07user 0.05system 0:16.91elapsed 0%CPU (0avgtext+0avgdata 16896maxresident)k

0inputs+0outputs (0major+1960minor)pagefaults 0swaps

so way faster than on my local desktop CPU, hurray.

After setup from: askubuntu.com/a/1309774/52975 we were able to run:

head -n1000 pap.txt | ARGOS_DEVICE_TYPE=cuda time argos-translate --from-lang en --to-lang fr > pap-fr.txt

which gave:

77.95user 2.87system 0:39.93elapsed 202%CPU (0avgtext+0avgdata 4345988maxresident)k

0inputs+88outputs (0major+910748minor)pagefaults 0swaps

so only marginally better than on P14s. It would be fun to see how much faster we could make things on a more powerful GPU.

These come with pre-installed drivers, so e.g. nvidia-smi just works on them out of the box, tested on g5.xlarge which has an Nvidia A10G GPU. Good choice as a starting point for deep learning experiments.

Not possible directly without first creating an AMI image from snapshot? So annoying!

The hot and more expensive storage for Amazon EC2, where e.g. your Ubuntu filesystem will live.

The cheaper and slower alternative is to use Amazon S3.

Large but ephemeral storage for EC2 instances. Predetermined by the EC2 instance type. Stays in the local server disk. Not automatically mounted.

- docs.aws.amazon.com/AWSEC2/latest/UserGuide/InstanceStorage.html (archive) notably highlights what persists, which is basically nothing

- serverfault.com/questions/433703/how-to-use-instance-store-volumes-storage-in-amazon-ec2 mentions that you have to mount it

Amazon's information about their own instances is so bad and non-public that this was created: instances.vantage.sh/

AMD GPUs as mentioned at: aws.amazon.com/ec2/instance-types/g4/

Mentioned at: aws.amazon.com/ec2/instance-types/g4/

TODO meaning of "dn"? "n" presumably means Nvidia, but what is the "d"? Compare it with g4ad.xlarge, which has AMD GPUs. aws.amazon.com/ec2/instance-types/g4/ mentions:

G4 instances are available with a choice of NVIDIA GPUs (G4dn) or AMD GPUs (G4ad).

Price:

- 2025-03-10: 0.526 USD / Hour

1 Nvidia A10G GPU, 4 vCPUs.

The OS is usually virtualized, and you get only a certain share of the CPU by default.

Highly managed, you don't even see the Docker images, only some higher level JSON configuration file.

These setups are really convenient and cheap, and form a decent way to try out a new website with simple requirements.


This feels good.

One problem though is that Heroku is very opinionated, as are likely other PaaSes. So if you are trying something that is slightly off the most common use case, you might be fucked.

Another problem with Heroku is that it is extremely difficult to debug a build that is broken on Heroku but not locally. We needed a way to drop into a shell in the middle of the build in case of failure. Otherwise it is impossible.

Deployment:

git push heroku HEAD:master

View stdout logs:

heroku logs --tail

PostgreSQL database: it seems to be delegated to AWS. How to browse the database: stackoverflow.com/questions/20410873/how-can-i-browse-my-heroku-database

heroku pg:psql

Drop and recreate the database. All tables are destroyed:

heroku pg:reset --confirm <app-name>

Restart app:

heroku restart

Arghh, why so hard... tested 2021:

- SendGrid: this one is the first one I got working on free tier!

- Mailgun: the Heroku add-on creates a free plan. This is smaller than the flex plan, does not allow custom domains, and is not available when signing up on mailgun.com directly: help.mailgun.com/hc/en-us/articles/203068914-What-Are-the-Differences-Between-the-Free-and-Flex-Plans- And without custom domains you cannot send emails to anyone, only to the 5 manually whitelisted addresses, thus making this worthless. Also, Gmail is not able to verify the DNS of the sandbox emails, and they go to spam. Mailgun does feel good otherwise if you are willing to pay. Their Heroku integration feels great, exposing everything you need on environment variables straight away.

- CloudMailin: does not feel as well developed as Mailgun. More focus on receiving. Tried adding TXT xxx._domainkey.ourbigbook.com and CNAME mta.ourbigbook.com entries with a custom domain to see if it works; it took forever to find that page: www.cloudmailin.com/outbound/domains/xxx Domain verification requires a bit of human contact via email. They also don't document their Heroku usage well. The envvars generated on Heroku are useless, only good for logging in on their web UI. The send username and password must be obtained on their confusing web UI.

Most/all commands have the -V option which prints the version, e.g.:

bsub -V

Submit a new job. The most important command!

By default, LSF only sends you an email with the stdout and stderr included in it, and does not show or store anything locally.

One option to store things locally is to use:

bsub -oo stdout.log -eo stderr.log 'echo myout; echo myerr 1>&2'

as documented at:

Or to use files with the job id in them:

bsub -oo %J.out -eo %J.err 'echo myout; echo myerr 1>&2'

By default, bsub -oo:

- also stores the LSF metadata in addition to the actual submitted process stdout

- prevents the completion email from being sent

To get just the stdout to the file, use, as mentioned at:

bsub -N -oo

which:

- stores only stdout on the file

- re-enables the completion email

Another option is to run with the bsub -I option:

bsub -I 'echo a;sleep 1;echo b;sleep 1;echo c'

This immediately prints stdout and stderr to the terminal. Ctrl + C kills the job on remote as well as locally.

Run bsub on foreground, showing stdout on host stdout live, with an interactive job via the bsub -I option:

bsub -I 'echo a;sleep 1;echo b;sleep 1;echo c'; echo done

View stdout/stderr of a running job.

Kill jobs.

By the current user:

bkill 0

Some good insights on the earlier history of the industry at: The Supermen: The Story of Seymour Cray by Charles J. Murray (1997).

First publicly reached by Frontier.

The "exascale hypothesis" is a name made up by Ciro Santilli to refer to the hypothesis that the real-time human brain simulation becomes possible at exascale computing.

It is a simple extrapolation from the number of synapses in the human brain () times the number of times each neuron fires per second.

Intel supercomputer market share from 1993 to 2020. Source.

This graph is shocking, they just took over the entire market! Some good pre-Intel context at The Supermen: The Story of Seymour Cray by Charles J. Murray (1997), e.g. in those earlier days, custom architectures like Cray's and many others dominated.

Early models were heavy and not practical for people to carry, so the main niche they initially filled was being carried in motor vehicles, notably trucks whose drivers are commercially driving all day long.

It also helps in the case of trucks that you only need to cover a one-dimensional region of the main roads.

For example, this niche was the original entry point of companies such as:


This section is about companies that integrate parts and software from various other companies to make up fully working computer systems.

Their websites are a bit shitty, clearly a non-cohesive amalgamation of several different groups.

E.g. you have to create several separate accounts, and different regions have completely different accounts and websites.

The Europe replacement part website for example is clearly made by a third party called flex.com/ and has Flex written all over it, and the header of the home page has slightly but very obviously broken CSS. And you can't create an account without a VAT number... and they confirmed by email that they don't sell to non-corporate entities without a VAT number. What bullshit!

This is Ciro Santilli's favorite laptop brand. He's been on it since the early 2010's after he saw his then-girlfriend-later-wife using it.

Ciro doesn't know how to explain it, but ThinkPads just feel... right. The screen, the keyboard, the lid, the touchpad are all exactly what Ciro likes.

The only problem with ThinkPad is that it is owned by Lenovo which is a Chinese company, and that makes Ciro feel bad. But he likes it too much to quit... what to do?

Ciro is also reassured to see that in every enterprise he's been at so far as of 2020, ThinkPads are very dominant. And the same when you see internal videos from other big tech enterprises, all those nerds are running... Ubuntu on ThinkPads! And the ISS.

Those nerds like their ThinkPads so much, that Ciro has seen some acquaintances with crazy old ThinkPad machines, missing keyboard buttons or the like. They just like their machines that much.

ThinkPads are also designed for repairability, it is easy to buy replacement parts, and there are OEM part replacement video tutorials: www.youtube.com/watch?v=vseFzFFz8lY No visible planned obsolescence here! With the caveat that the official online part stores can be shit as mentioned at Section "Lenovo".

Furthermore, in 2020 Lenovo announced full certification for Ubuntu www.forbes.com/sites/jasonevangelho/2020/06/03/lenovos-massive-ubuntu-and-red-hat-announcement-levels-up-linux-in-2020/#28a8fd397ae0 which is fantastic news!

The only thing Ciro never understood is the trackpoint: superuser.com/questions/225059/how-to-get-used-of-trackpoint-on-a-thinkpad Why would you use that with such an amazing touchpad? And vimium.

Change password without access:

Enable SSH on boot:

sudo touch /boot/ssh

Model B V 1.1.

SoC: BCM2836

Model B V 1.2.

SoC: BCM2837

Serial from cat /proc/cpuinfo: 00000000c77ddb77

Some key specs:

- SoC:

- name: RP2040. Custom designed by the Raspberry Pi Foundation, likely the first chip they made themselves rather than using a Broadcom chip. But the design is still closed source, and it likely wouldn't be easy to open source due to the usage of closed proprietary IP like the ARM cores.

- dual core ARM Cortex-M0+

- frequency: 2 kHz to 133 MHz, 125 MHz by default

- memory: 264KB on-chip SRAM

- GPIO voltage: 3.3V

Getting started on Ubuntu 25.04: see: Program Raspberry Pi Pico W with X.

Then ignore the other steps from the tutorial, as these use the picozero package, which is broken with this error: github.com/raspberrypilearning/getting-started-with-the-pico/issues/57

AttributeError: module 'pkgutil' has no attribute 'ImpImporter'. Did you mean: 'zipimporter'

The tutorial also uses picozero-specific code. Rather, just use our examples from rpi-pico-w.

This is a major design flaw: the only easy default way to flash is to unplug, press BOOTSEL, and replug.

Tested on Ubuntu 25.04:

sudo apt install libusb-1.0-0-dev

git clone https://github.com/raspberrypi/pico-sdk

git clone https://github.com/raspberrypi/picotool

cd picotool

git checkout de8ae5ac334e1126993f72a5c67949712fd1e1a4

export PICO_SDK_PATH="$(pwd)/../pico-sdk"

mkdir build

cd build

cmake ..

cmake --build . -- -j"$(nproc)" VERBOSE=1

This produces build/picotool, so copy it somewhere in your PATH, e.g.:

cp picotool ~/bin

and then flash with:

sudo ~/bin/picotool load -f build/zephyr/zephyr.uf2

If this fails with:

No accessible RP2040 devices in BOOTSEL mode were found

make sure that the program was built with USB stdio enabled, e.g. in CMake:

pico_enable_stdio_usb(blink 1)

and that your program initializes the USB code via a call to "stdio_init_all()".

Note that the LED blinker example does not work on the Raspberry Pi Pico W, see also: Run Zephyr on Raspberry Pi Pico W.

You can speed up the debug loop a little bit by plugging the Pi in with BOOTSEL selected, and then running:

west flash --runner uf2

This flashes the image, and immediately turns off BOOTSEL mode and runs the program. However, to run again you need to unplug the USB and re-plug with BOOTSEL again, so it is still painful.

The Zephyr LED blinker example does not work on the Raspberry Pi Pico W because the on-board LED is wired differently. But the hello world works, and with:

screen /dev/ttyUSB0 115200

the host shows:

*** Booting Zephyr OS build v4.2.0-491-g47b07e5a09ef ***

Hello World! rpi_pico/rp2040

Nice!

This section is about the original Raspberry Pi Pico board. The "1" was added retroactively to the name as more boards were released and "Raspberry Pi Pico" became a generic name for the brand.

Has the Serial Wire Debug (SWD) pins pre-soldered. Why would you ever get one without them unless you are a clueless newbie like Ciro Santilli?!?!

It is however possible to solder it yourself on Raspberry Pi Pico W.

You can connect from an Ubuntu 22.04 host as:

screen /dev/ttyACM0 115200

but be aware of: Raspberry Pi Pico W freezes a few seconds after screen disconnects from UART.

When in screen, you can Ctrl + C to kill main.py, and then execution stops and you are left in a Python shell. From there:

- Ctrl + D: reboots

- Ctrl + A K: kills the GNU screen window. Execution continues normally

Other options:

- ampy run command, which solves How to run a MicroPython script from a file on the Raspberry Pi Pico W from the command line?

Install firmware: projects.raspberrypi.org/en/projects/getting-started-with-the-pico/3

Then there are two approaches:

- thonny if you like clicking mouse buttons:

pipx install thonny

and select the interpreter as the Pico.

- ampy if you like things to just run from the CLI: How to run a MicroPython script from a file on the Raspberry Pi Pico W from the command line?

The first/only way Ciro could find was with ampy: stackoverflow.com/questions/74150782/how-to-run-a-micropython-host-script-file-on-the-raspbery-pi-pico-from-the-host/74150783#74150783 That just worked and it worked perfectly!

pipx install adafruit-ampy

ampy --port /dev/ttyACM0 run blink.py

TODO: possible with rshell?

Install on Ubuntu 22.04:

python3 -m pip install --user adafruit-ampy

Bibliography:

Ctrl + X. Documented by running help repl from the main shell.

Examples at: Raspberry Pi Pico W MicroPython example.

Examples at: Raspberry Pi Pico W MicroPython example.

An upstream repo at: github.com/raspberrypi/pico-micropython-examples

Some generic Micropython examples most of which work on this board can be found at: Section "MicroPython example".

Pico W specific examples are under: rpi-pico-w/upython.

The examples can be run as described at Program Raspberry Pi Pico W with MicroPython.

- rpi-pico-w/upython/led_on.py: turn on-board LED on and leave it on forever. Useful to quickly check that you are still able to update the firmware.

- rpi-pico-w/upython/led_off.py: turn on-board LED off and leave it off forever

- rpi-pico-w/upython/pwm.py: pulse width modulation. Using the same circuit as the rpi-pico-w/upython/blink_gpio.py, you will now see the external LED go from dark to bright continuously and then back
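rpi-pico-w/upython/pwm.py itself is not reproduced in this section. What follows is a hypothetical sketch of the idea, not the actual file. A fade like the one described is just a sequence of `duty_u16` values that ramps up and then back down. The name `fade_duties`, the step count and the delay are made up here. The pin follows blink_gpio.py.

```python
U16MAX = 2 ** 16 - 1


def fade_duties(steps):
    """duty_u16 values for one dark -> bright -> dark cycle, `steps` per half."""
    up = [round(U16MAX * i / steps) for i in range(steps + 1)]
    # Go back down without repeating the peak value.
    return up + up[-2::-1]


# Hypothetical use on the board (MicroPython), same pin as blink_gpio.py:
#
# from machine import Pin, PWM
# import time
# pwm = PWM(Pin(0))
# pwm.freq(1000)
# while True:
#     for duty in fade_duties(64):
#         pwm.duty_u16(duty)
#         time.sleep(0.01)

if __name__ == '__main__':
    print(fade_duties(4))
```

The PWM frequency is kept well above what the eye can see, so only the duty cycle changes the perceived brightness.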

Blink on-board LED. Note that they broke the LED hello world compatibility from non-W to W for God's sake!!!

The MicroPython code needs to be changed from the Raspberry Pi Pico 1, forums.raspberrypi.com/viewtopic.php?p=2016234#p2016234 comments:

Unlike the original Raspberry Pi Pico, the on-board LED on Pico W is not connected to a pin on RP2040, but instead to a GPIO pin on the wireless chip.

rpi-pico-w/upython/blink.py

from machine import Pin
import time

led = Pin('LED', Pin.OUT)
# For Rpi Pico (non-W) it was like this instead apparently.
# led = Pin(25, Pin.OUT)
while True:
    led.toggle()
    time.sleep(.5)

Same as the more generic micropython/blink_gpio.py but with the onboard LED added.

rpi-pico-w/upython/blink_gpio.py

import machine
import time

led = machine.Pin('LED', machine.Pin.OUT)
pin = machine.Pin(0, machine.Pin.OUT)
i = 0
while True:
    pin.toggle()
    led.toggle()
    print(i)
    time.sleep(.5)
    i += 1

Any print() command ends up on the USB, and is shown on the computer via programs such as ampy.

However, you can also send data over actual UART.

We managed to get it working based on: timhanewich.medium.com/using-uart-between-a-raspberry-pi-pico-and-raspberry-pi-3b-raspbian-71095d1b259f with the help of a DSD TECH USB to TTL Serial Converter CP2102 just as shown at: stackoverflow.com/questions/16040128/hook-up-raspberry-pi-via-ethernet-to-laptop-without-router/39086537#39086537 for the RPI.

We connect Pin 0 (TX), Pin 1 (RX) and Pin 2 (GND) to the DSD TECH, and the USB to the Ubuntu 25.04 host laptop.

Then on the host laptop I run:

screen /dev/ttyUSB0 9600

and a counter shows up there just fine!

rpi-pico-w/upython/uart.py

from machine import UART, Pin
import time

led = Pin('LED', Pin.OUT)
uart0 = UART(0, baudrate=9600, tx=Pin(0), rx=Pin(1))
#uart1 = UART(1, baudrate=9600, tx=Pin(4), rx=Pin(5))
i = 0
while True:
    led.toggle()
    uart0.write(str(i) + '\r\n')
    print(-i)
    time.sleep(.5)
    i += 1
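Instead of screen, the host side can also be read from a script, for example to check that no counter values get lost. What follows is a minimal sketch. It assumes the third-party pyserial package and the same /dev/ttyUSB0 at 9600 baud used with screen above. The helper name `parse_counter` is made up here.

```python
import sys


def parse_counter(line):
    """Decode one counter line written by uart.py (digits plus CR LF) into an int."""
    return int(line.decode('ascii').strip())


def main(port='/dev/ttyUSB0'):
    # pyserial is third-party (pip install pyserial), so it is only imported here.
    import serial

    previous = None
    with serial.Serial(port, 9600, timeout=2) as ser:
        while True:
            line = ser.readline()
            try:
                value = parse_counter(line)
            except (UnicodeDecodeError, ValueError):
                continue  # timeout (empty read) or a partial line at startup
            if previous is not None and value != previous + 1:
                print('gap after', previous, file=sys.stderr)
            print(value)
            previous = value


if __name__ == '__main__':
    main(*sys.argv[1:])
```

The negative numbers from `print(-i)` go to USB, not to this UART, so they never show up here.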

The program continuously prints to the USB the value of the ADC on GPIO 26 once every 0.2 seconds.

The onboard LED is blinked as a heartbeat.

The hello world is with a potentiometer: extremes on the GND and VCC pins of the Pi, and middle output on pin GPIO26. Then as you turn the knob, the printed value goes from about 0 to about 64k.

The 0 side is quite noisy and varies between 0 and 300 for some reason.

In Ciro's ASCII art circuit diagram notation:

RPI_PICO_W__gnd__gpio26Adc__3.3V@36

| | |

| | |

| +-+ |

| | |

| | +---------+

| | |

P__1__2__3

rpi-pico-w/upython/adc.py

from machine import ADC, Pin
from time import sleep

led = Pin('LED', Pin.OUT)
adc = ADC(Pin(26))
while True:
    print(adc.read_u16())
    led.toggle()
    sleep(0.2)
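`read_u16()` returns a value scaled to the 0 to 65535 range, so converting it to volts is a linear rescale. What follows is a minimal host-testable sketch. It assumes the potentiometer spans GND to the 3.3V pin as wired above. The helper name `u16_to_volts` is made up here. By this arithmetic, the 0 to 300 noise at the bottom of the range is only about 15 mV.

```python
U16MAX = 2 ** 16 - 1
VREF = 3.3  # assumption: the potentiometer spans GND to the 3.3V pin


def u16_to_volts(raw, vref=VREF):
    """Map an ADC.read_u16() reading (0..65535) linearly onto 0..vref volts."""
    return raw * vref / U16MAX


if __name__ == '__main__':
    for raw in (0, 300, 32768, 65535):
        print(raw, round(u16_to_volts(raw), 4))
```

On the board, the same function could wrap the reading, e.g. `print(u16_to_volts(adc.read_u16()))`.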

This example attempts to keep temperature to a fixed point by turning on a fan when a thermistor gets too hot.

You can test it easily if you are not in a place that is too hot by holding the thermistor with your finger to turn on the fan.

You can use a simple LED to represent the fan if you don't have one handy.

In Ciro's ASCII art circuit diagram notation:

+----------FAN-----------+

| |

| |

RPI_PICO_W__gnd__gpio26Adc__3.3V@36__gpio2

| | |

| | |

| | |

| +-THERMISTOR

| |

| |

R_10-+

rpi-pico-w/upython/thermistor_fan_control.py

from math import log
from machine import ADC, Pin, PWM, UART
from time import sleep

# Thermistor parameters found on its datasheet.
T_R25 = 10000
T_BETA = 3950
# Resistance of the other fixed resistor of the thermistor voltage divider.
# We select it to match the thermistor at 25 C.
T_R_OTHER = 10000
T0 = 273.15
TARGET_C = 29
U16MAX = 2 ** 16 - 1

led = Pin('LED', Pin.OUT)
adc = ADC(Pin(26))
uart0 = UART(0, baudrate=9600, tx=Pin(0), rx=Pin(1))
pwm = PWM(Pin(2))
pwm.freq(1000)

while True:
    v_other_frac = max(adc.read_u16(), 1)/(U16MAX + 1)
    # Thermistor resistance, with V the 3.3V supply across the divider:
    # R_other = V_other/I = V_other / (V / (R_other + R_thermistor)) =
    # V_other (R_other + R_thermistor) / V =>
    # R_thermistor = (R_other * V / V_other) - R_other = R_other * (V/V_other - 1)
    tr = T_R_OTHER * (1/v_other_frac - 1)
    # Temperature.
    # https://en.wikipedia.org/wiki/Thermistor#B_or_%CE%B2_parameter_equation
    temp_c = 1/(1/(T0 + 25) + (1/T_BETA)*log(tr/T_R25)) - T0
    sleep(0.2)
    led.toggle()
    # Fan off at or below TARGET_C, ramping to full duty at TARGET_C + 5.
    duty = min(U16MAX, int(U16MAX * max(temp_c - TARGET_C, 0)/5))
    s = 'temp_c={} tr={} duty={}'.format(temp_c, tr, duty)
    print(s)
    uart0.write(s + '\r\n')
    pwm.duty_u16(duty)
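The conversion math can be checked on the host before flashing. The sketch below factors the same formulas and constants out of thermistor_fan_control.py into plain functions. The names `adc_to_temp_c` and `temp_to_duty` are made up here. With the 10k/10k divider, a half-scale reading should come out at exactly the 25 C reference point.

```python
from math import log

T_R25 = 10000      # thermistor resistance at 25 C, from its datasheet
T_BETA = 3950      # thermistor Beta parameter
T_R_OTHER = 10000  # fixed resistor of the voltage divider
T0 = 273.15
TARGET_C = 29
U16MAX = 2 ** 16 - 1


def adc_to_temp_c(raw):
    """Same computation as the script above, for a raw read_u16() value."""
    v_other_frac = max(raw, 1) / (U16MAX + 1)
    tr = T_R_OTHER * (1 / v_other_frac - 1)
    # B parameter equation: 1/T = 1/T25 + (1/B) ln(R/R25)
    return 1 / (1 / (T0 + 25) + (1 / T_BETA) * log(tr / T_R25)) - T0


def temp_to_duty(temp_c, target_c=TARGET_C, span_c=5):
    """0 at or below target_c, ramping linearly to full duty at target_c + span_c."""
    return min(U16MAX, int(U16MAX * max(temp_c - target_c, 0) / span_c))


if __name__ == '__main__':
    for raw in (20000, 32768, 40000):
        t = adc_to_temp_c(raw)
        print(raw, round(t, 2), temp_to_duty(t))
```

Higher readings mean more voltage across the fixed resistor. For this NTC thermistor, that means lower thermistor resistance and therefore a higher temperature.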

- www.raspberrypi.com/documentation/microcontrollers/c_sdk.html

- github.com/raspberrypi/pico-sdk

- github.com/raspberrypi/pico-examples The key hello world examples are:

The Ubuntu 22.04 build just worked, nice! It feels much cleaner than the Micro Bit C setup:

sudo apt install cmake gcc-arm-none-eabi libnewlib-arm-none-eabi libstdc++-arm-none-eabi-newlib

git clone https://github.com/raspberrypi/pico-sdk

cd pico-sdk

git checkout 2e6142b15b8a75c1227dd3edbe839193b2bf9041

cd ..

git clone https://github.com/raspberrypi/pico-examples

cd pico-examples

git checkout a7ad17156bf60842ee55c8f86cd39e9cd7427c1d

cd ..

export PICO_SDK_PATH="$(pwd)/pico-sdk"

cd pico-examples

mkdir build

cd build

# Board selection.

# https://www.raspberrypi.com/documentation/microcontrollers/c_sdk.html also says you can give wifi ID and password here for W.

cmake -DPICO_BOARD=pico_w ..

make -j

Then we install the programs just like any other UF2, by plugging the board in with BOOTSEL pressed and copying the UF2 over, e.g.:

cp pico_w/blink/picow_blink.uf2 /media/$USER/RPI-RP2/

Note that there is a separate example for the W and non-W LED. For non-W it is:

cp blink/blink.uf2 /media/$USER/RPI-RP2/

Also tested the UART over USB example:

cp hello_world/usb/hello_usb.uf2 /media/$USER/RPI-RP2/

You can then see the UART messages with:

screen /dev/ttyACM0 115200

TODO understand the proper debug setup, and a flash setup that doesn't require us to unplug and replug the thing every two seconds. www.electronicshub.org/programming-raspberry-pi-pico-with-swd/ appears to describe it, with SWD to do both debug and flash. To do it, you seem to need another board with GPIO, e.g. a Raspberry Pi. The laptop alone is not enough.

Season 1 was amazing. The others fell off a bit.

This section is about companies that design semiconductors.

For companies that manufacture semiconductors, see also: company with a semiconductor fabrication plant.


How AMD went from nearly Bankrupt to Booming by Brandon Yen (2021)

Source.

- youtu.be/Rtb4mjIACTY?t=118 Bulldozer series CPUs were a disaster

- youtu.be/Rtb4mjIACTY?t=324 got sued for marketing claims on number of cores vs number of hyperthreads

- youtu.be/Rtb4mjIACTY?t=556 Ryzen first gen was rushed and a bit buggy, but it had potential. Gen 2 fixed those.

- youtu.be/Rtb4mjIACTY?t=757 Ryzen Gen 3 surpassed the single thread performance of Intel. Previously Gen 2 had won multicore.

They have been masters of second sourcing things for a long time! One can only imagine the complexity of the Intel cross licensing deals.

This was the CPU architecture that saved AMD in the 2010's, see also: Video 24. "How AMD went from nearly Bankrupt to Booming by Brandon Yen (2021)"

Each microarchitecture appears to fully specify all core parameters. It feels likely that they just reuse most or all of the RTL, or even pre-synthesize core blobs.

Bibliography:

- wiki.archlinux.org/title/AMDGPU

- gitlab.freedesktop.org/drm/amd an issue tracker

- github.com/ROCm/ROCK-Kernel-Driver TODO vs the GitLab?

Mentioned e.g. at: videocardz.com/newz/amd-begins-rdna3-gfx11-graphics-architecture-enablement-for-llvm-project as being part of RDNA 3.

AMD Founder Jerry Sanders Interview (2002)

Source. Source: exhibits.stanford.edu/silicongenesis/catalog/hr396zc0393. Fun to watch.

- youtu.be/HqWWoaA8pIs?t=779 Newton Minow mandated UHF on all television sets in 1961, and the oscillator needed for the tuner was one of the first major non-military products from Fairchild, the 28918 (?).

- youtu.be/HqWWoaA8pIs?t=1053 Fairchild had won the first round of a Minuteman contract, but lost the second one due to poor management

Arm 30 Years On: Episode Three by Arm Ltd. (2022)

Source. This one is about the boring US expansion. The other two are worth it.

This situation is the most bizarre thing ever. The dude was fired in 2020, but he refused to be fired, and because he has the company seal, they can't fire him. He is still going to the office as of 2022. It makes one wonder what the true political causes for this situation are. A big warning sign to all companies trying to set up joint ventures in China!

ARM Fired ARM China’s CEO But He Won’t Go by Asianometry (2021)

Source.

For some reason they attempt to make a single chip on an entire wafer!

They didn't care about MLperf as of 2019: www.zdnet.com/article/cerebras-did-not-spend-one-minute-working-on-mlperf-says-ceo/

- 2023: www.eetimes.com/cerebras-sells-100-million-ai-supercomputer-plans-8-more/ Cerebras Sells $100 Million AI Supercomputer, Plans Eight More


Official page: www.intel.com/content/www/us/en/products/sku/97496/intel-core-i77820hq-processor-8m-cache-up-to-3-90-ghz/specifications.html

"Intel Research Lablets", that's a terrible name.

Open source driver/hardware interface specification??? E.g. on Ubuntu, a large part of the nastiest UI breaking bugs Ciro Santilli encountered over the years have been GPU related. Do you think that is a coincidence??? E.g. Ubuntu 21.10 does not wake up from suspend.

Linus Torvalds saying "Nvidia Fuck You" (2012)

Source. How Nvidia Won Graphics Cards by Asianometry (2021)

Source.

- Doom was the first killer app of personal computer 3D graphics! As opposed to professional rendering, e.g. for CAD, as was supported by Silicon Graphics

- youtu.be/TRZqE6H-dww?t=694 they bet on Direct3D

- youtu.be/TRZqE6H-dww?t=749 they wrote their own drivers. At the time, most drivers were written by the computer manufacturers. That's insane!

How Nvidia Won AI by Asianometry (2022)

Source.

This section is about Nvidia GPUs that are focused on compute rather than rendering.

Until 2020 these were branded as Nvidia Tesla, but then Nvidia dropped that brand due to confusion with Tesla, Inc., the car maker[ref].

Official page: www.nvidia.com/en-gb/data-center/tesla-t4/

According to wccftech.com/nvidia-drops-tesla-brand-to-avoid-confusion-with-tesla/ this was the first card that semi-dropped the "Nvidia Tesla" branding, though it is still visible in several places.

Official page: www.nvidia.com/en-gb/data-center/products/a10-gpu/

According to www.baseten.co/blog/nvidia-a10-vs-a10g-for-ml-model-inference/ the Nvidia A10G is a variant of the Nvidia A10 created specifically for AWS. As such there isn't much information publicly available about it.

the A10 prioritizes tensor compute, while the A10G has a higher CUDA core performance

Ciro Santilli has always had a good impression of these people.

This company is a bit like Sun Microsystems, you can hear a note of awe in the voice of those who knew it at its peak. This was a bit before Ciro Santilli's awakening.

Both it and Sun kind of died in the same way: unable to move from the workstation to the personal computer fast enough, they just got killed by the scale of competitors who did, notably Nvidia for graphics cards.

Some/all Nintendo 64 games were developed on it, e.g. it is well known that this was the case for Super Mario 64.

Also they were a big UNIX vendor, which is another kudos to the company.

Silicon Graphics Promo (1987)

Source. Highlights that this was one of the first widely available options for professional engineers/designers to do real-time 3D rendering for their designs. Presumably before it, you had to use scripting to CPU render, and make any changes incrementally by modifying the script.

China's Making x86 Processors by Asianometry (2021)

Source.

Ciro Santilli


OurBigBook.com