The main reason Ciro Santilli never touched it is that it feels that every public data set has already been fully mined or has already had the most interesting algorithms developed for it, so you can't do much outside of big companies.

This is why Ciro started Ciro's 2D reinforcement learning games to generate synthetic data and thus reduce the cost of data.

The other reason is that it is ugly.

- Theoretical peak performance of GPT inference

- llama-cli inference batching

- Robot AI

- Toronto faces dataset

- 2023-12: New York Times vs OpenAI: www.wsj.com/tech/ai/new-york-times-sues-microsoft-and-openai-alleging-copyright-infringement-fd85e1c4

- 2023-02: Getty Images vs Stable Diffusion: www.theverge.com/2023/2/6/23587393/ai-art-copyright-lawsuit-getty-images-stable-diffusion

Given a bunch of points in $n$ dimensions, PCA maps those points to a new $d$ dimensional space with $d \le n$.

$d$ is a hyperparameter, $d = 1$ and $d = 2$ are common choices when doing dataset exploration, as they can be easily visualized on a planar plot.

The mapping is done by projecting all points to a $d$ dimensional hyperplane. PCA is an algorithm for choosing this hyperplane and the coordinate system within this hyperplane.

The hyperplane choice is done as follows:

- the hyperplane will have origin at the mean point

- the first axis is picked along the direction of greatest variance, i.e. where points are the most spread out. Intuitively, if we pick an axis of small variation, that would be bad, because all the points are very close to one another on that axis, so it doesn't contain as much information that helps us differentiate the points.

- then we pick a second axis, orthogonal to the first one, and on the direction of second largest variance

- and so on until $d$ orthogonal axes are taken

www.sartorius.com/en/knowledge/science-snippets/what-is-principal-component-analysis-pca-and-how-it-is-used-507186 provides an OK-ish example with a concrete context. In there, each point is a country, and the input data is the consumption of different kinds of foods per year, e.g.:

- flour

- dry codfish

- olive oil

- sausage

so in this example, we would have input points in 4D.

The question is then: we want to be able to identify the country by what they eat.

Suppose that every country consumes the same amount of flour every year. Then, that number doesn't tell us much about which country each point represents (has the least variance), and the first PCA axes would basically never point anywhere near that direction.

Another cool thing is that PCA seems to automatically account for linear dependencies in the data, so it skips selecting highly correlated axes multiple times. For example, suppose that dry codfish and olive oil consumption are very high in Portugal and Spain, but very low in Germany and Poland. Therefore, the variation is very high in those two parameters, and contains a lot of information.

However, suppose that dry codfish consumption is also directly proportional to olive oil consumption. Because of this, it would be kind of wasteful if we selected:

- dry codfish as the first axis

- olive oil as the second axis

since the information about codfish already tells us the olive oil. PCA apparently recognizes this, and instead picks the first axis at a 45 degree angle to both dry codfish and olive oil, and then moves on to something else for the second axis.

We can see that much like the rest of machine learning, PCA can be seen as a form of compression.
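The axis selection described above can be sketched with NumPy. This is a minimal illustration that uses the singular value decomposition to find the axes, not a reference implementation. The consumption numbers are made up for the example: flour is constant, and dry codfish is set exactly equal to olive oil, so we would expect the first axis to ignore flour and to weigh codfish and olive oil equally.

```python
import numpy as np


def pca(points, d):
    """Project n-dimensional points onto the d directions of greatest variance."""
    mean = points.mean(axis=0)
    centered = points - mean  # the hyperplane has its origin at the mean point
    # Rows of vt are orthonormal axes sorted by decreasing variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    axes = vt[:d]
    variances = singular_values[:d] ** 2 / (len(points) - 1)
    return centered @ axes.T, axes, variances


# Hypothetical yearly consumption: flour, dry codfish, olive oil, sausage.
countries = np.array([
    [50.0, 30.0, 30.0, 5.0],   # "Portugal"
    [50.0, 28.0, 28.0, 8.0],   # "Spain"
    [50.0, 2.0, 2.0, 20.0],    # "Germany"
    [50.0, 1.0, 1.0, 25.0],    # "Poland"
])
projected, axes, variances = pca(countries, d=2)
print("first axis:", np.round(axes[0], 3))
print("variance along each axis:", np.round(variances, 3))
print("2D coordinates:\n", np.round(projected, 3))
```

Each country is now described by 2 numbers instead of 4, which is the compression view of PCA mentioned above.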

A parameter that you choose which determines how the algorithm will perform.

In the case of machine learning in particular, it is not part of the training data set.

Hyperparameters can also be considered in domains outside of machine learning however, e.g. the step size in a partial differential equation solver is entirely independent from the problem itself and could be considered a hyperparameter. One difference from machine learning however is that step size hyperparameters in numerical analysis are clearly better if smaller, at a higher computational cost. In machine learning however, there is often an optimum somewhere, beyond which overfitting becomes excessive.
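The step size trade-off can be illustrated with a toy solver. The sketch below uses the explicit Euler method on the ordinary differential equation dy/dt = y as a simpler stand-in for a partial differential equation solver. The equation and the step values are choices made for this illustration only.

```python
import math


def euler_exp(step):
    """Integrate dy/dt = y from t=0 to t=1 with y(0)=1 using explicit Euler."""
    n_steps = round(1.0 / step)
    y = 1.0
    for _ in range(n_steps):
        y += step * y
    return y, n_steps


# The exact answer is e. Smaller steps cost more iterations but get closer.
for step in (0.1, 0.01, 0.001):
    y, n_steps = euler_exp(step)
    print(f"step={step} iterations={n_steps} error={abs(y - math.e):.6f}")
```

Unlike a machine learning hyperparameter, there is no sweet spot here: the error keeps shrinking as the step shrinks, and only the computational cost pushes back.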

Philosophically, machine learning can be seen as a form of lossy compression.

And if we make it too lossless, then we are basically overfitting.


An impossible AI-complete dream!

It is impossible to understand speech, and take meaningful actions from it, if you don't understand what is being talked about.

And without doubt, "understanding what is being talked about" comes down to understanding (efficiently representing) the geometry of the 3D world with a time component.

Not from hearing sounds alone.

- analyticsindiamag.com/5-open-source-recommender-systems-you-should-try-for-your-next-project/ 5 Open-Source Recommender Systems You Should Try For Your Next Project (2019)

One of the simplest classification algorithms one can think of: just see whatever kind of point your new point seems to be closest to, and say it is also of that type! Then it is just a question of defining "close".

Scikit-learn implementation scikit-learn.org/stable/auto_examples/neighbors/plot_classification.html at python/sklearn/knn.py
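For intuition, here is a minimal pure Python sketch of the idea. It is not the scikit-learn implementation. It assumes "close" means Euclidean distance and takes a majority vote among the `k` nearest training points. The sample points and labels are made up.

```python
from collections import Counter
import math


def knn_classify(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    by_distance = sorted(
        zip(train_points, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Two well separated clusters of hypothetical 2D points.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (4.8, 5.2)]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_classify(points, labels, (0.3, 0.3)))
print(knn_classify(points, labels, (4.5, 4.5)))
```

Swapping `math.dist` for another distance function is how one would change the definition of "close".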

This is the first thing you have to know about supervised learning:

- training is when you learn model parameters from input. This literally means learning the best value we can for a bunch of numeric parameters of the model. There can easily be hundreds of thousands of them.

- inference is when we take a trained model (i.e. with the parameters determined), and apply it to new inputs

Both of those already have hardware acceleration available as of the 2010s.
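The two phases can be seen on a toy model with just two parameters. This is a sketch using least squares line fitting, chosen for brevity and not taken from the original text. The training data is made up and follows y = 2x + 1 exactly.

```python
import numpy as np

# Training: learn the parameters (slope, intercept) from labelled inputs.
x_train = np.array([0.0, 1.0, 2.0, 3.0])
y_train = np.array([1.0, 3.0, 5.0, 7.0])
design = np.stack([x_train, np.ones_like(x_train)], axis=1)
params, *_ = np.linalg.lstsq(design, y_train, rcond=None)
slope, intercept = params


def infer(x):
    """Inference: apply the already determined parameters to a new input."""
    return slope * x + intercept


print("learned parameters:", slope, intercept)
print("prediction for x=10:", infer(10.0))
```

A neural network follows the same split, only with many more parameters and an iterative optimizer instead of a closed form solve.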

An IBM made/pushed term, but that matches Ciro Santilli's general view of how we should move forward towards AGI.

Ciro's motivation/push for this can be seen e.g. at: Ciro's 2D reinforcement learning games.

Interesting layer skip architecture thing.

Apparently destroyed ImageNet 2015 and became very very famous as such.

- torchvision ResNet

- MLperf v2.1 ResNet contains a pre-trained ResNet ONNX at zenodo.org/record/4735647/files/resnet50_v1.onnx for its inference benchmark. We've tested it at: Run MLperf v2.1 ResNet on Imagenette.

catalog.ngc.nvidia.com/orgs/nvidia/resources/resnet_50_v1_5_for_pytorch explains:

The difference between v1 and v1.5 is that, in the bottleneck blocks which requires downsampling, v1 has stride = 2 in the first 1x1 convolution, whereas v1.5 has stride = 2 in the 3x3 convolution. This difference makes ResNet50 v1.5 slightly more accurate (~0.5% top1) than v1, but comes with a small performance drawback (~5% imgs/sec).

CNN convolution kernels are not hardcoded. They are learnt and optimized via backpropagation. You just specify their size! For example, in PyTorch you'd do just:

nn.Conv2d(1, 6, kernel_size=(5, 5))

as used for example at: activatedgeek/LeNet-5.

This can also be inferred from: stackoverflow.com/questions/55594969/how-to-visualise-filters-in-a-cnn-with-pytorch where we see that the kernels are not perfectly regular as you'd expect from something hand coded.

It trains the LeNet-5 neural network on the MNIST dataset from scratch, and afterwards you can give it newly hand-written digits 0 to 9 and it will hopefully recognize the digit for you.

Ciro Santilli created a small fork of this repo at lenet adding better automation for:

- extracting MNIST images as PNG

- ONNX CLI inference taking any image files as input

- a Python tkinter GUI that lets you draw and see inference live

- running on GPU

Install on Ubuntu 24.10 with:

sudo apt install protobuf-compiler

git clone https://github.com/activatedgeek/LeNet-5

cd LeNet-5

git checkout 95b55a838f9d90536fd3b303cede12cf8b5da47f

virtualenv -p python3 .venv

. .venv/bin/activate

pip install \

Pillow==6.2.0 \

numpy==1.26.4 \

onnx==1.17.0 \

torch==2.6.0 \

torchvision==0.21.0 \

visdom==0.2.4 \

;

We use our own pip install because their requirements.txt uses >= instead of == making it random if things will work or not.

On Ubuntu 22.10 it was instead:

pip install \

Pillow==6.2.0 \

numpy==1.24.2 \

onnx==1.13.1 \

torch==2.0.0 \

torchvision==0.15.1 \

visdom==0.2.4 \

;

Then run with:

python run.py

This script:

- does a fixed 15 epochs on the training data

- it then uses the trained net from memory to check accuracy with the test data

- then it also produces a lenet.onnx ONNX file which contains the trained network, nice!

It throws a billion exceptions because we didn't start the Visdom server, but everything works nevertheless, we just don't get a visualization of the training.

The terminal outputs lines such as:

Train - Epoch 1, Batch: 0, Loss: 2.311587

Train - Epoch 1, Batch: 10, Loss: 2.067062

Train - Epoch 1, Batch: 20, Loss: 0.959845

...

Train - Epoch 1, Batch: 230, Loss: 0.071796

Test Avg. Loss: 0.000112, Accuracy: 0.967500

...

Train - Epoch 15, Batch: 230, Loss: 0.010040

Test Avg. Loss: 0.000038, Accuracy: 0.989300

One of the benefits of the ONNX output is that we can nicely visualize the neural network on Netron:

output = self.c1(img)

x = self.c2_1(output)

output = self.c2_2(output)

output += x

output = self.c3(output)

Number 9 drawn with mouse on GIMP by Ciro Santilli (2023)

Note that:

- the images must be drawn with white on black. If you use black on white, the accuracy becomes terrible. This is a very good example of brittleness in AI systems!

- images must be converted to 32x32 for lenet.onnx, as that is what training was done on. The training step converted the 28x28 images to 32x32 as the first thing it does before training even starts

We can try the code adapted from thenewstack.io/tutorial-using-a-pre-trained-onnx-model-for-inferencing/ at lenet/infer.py:

cd lenet

cp ~/git/LeNet-5/lenet.onnx .

wget -O 9.png https://raw.githubusercontent.com/cirosantilli/media/master/Digit_9_hand_drawn_by_Ciro_Santilli_on_GIMP_with_mouse_white_on_black.png

./infer.py 9.png

and it works pretty well! The program outputs:

9

as desired.

We can also try with images directly from Extract MNIST images:

infer_mnist.py lenet.onnx mnist_png/out/testing/1/*.png

and the accuracy is great as expected.

By default, the setup runs on CPU only, not GPU, as could be seen by running htop. But by the magic of PyTorch, modifying the program to run on the GPU is trivial with the following patch, which leads to a faster runtime, with less user time:

cat << EOF | patch

diff --git a/run.py b/run.py

index 104d363..20072d1 100644

--- a/run.py

+++ b/run.py

@@ -24,7 +24,8 @@ data_test = MNIST('./data/mnist',

data_train_loader = DataLoader(data_train, batch_size=256, shuffle=True, num_workers=8)

data_test_loader = DataLoader(data_test, batch_size=1024, num_workers=8)

-net = LeNet5()

+device = 'cuda'

+net = LeNet5().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=2e-3)

@@ -43,6 +44,8 @@ def train(epoch):

net.train()

loss_list, batch_list = [], []

for i, (images, labels) in enumerate(data_train_loader):

+ labels = labels.to(device)

+ images = images.to(device)

optimizer.zero_grad()

output = net(images)

@@ -71,6 +74,8 @@ def test():

total_correct = 0

avg_loss = 0.0

for i, (images, labels) in enumerate(data_test_loader):

+ labels = labels.to(device)

+ images = images.to(device)

output = net(images)

avg_loss += criterion(output, labels).sum()

pred = output.detach().max(1)[1]

@@ -84,7 +89,7 @@ def train_and_test(epoch):

train(epoch)

test()

- dummy_input = torch.randn(1, 1, 32, 32, requires_grad=True)

+ dummy_input = torch.randn(1, 1, 32, 32, requires_grad=True).to(device)

torch.onnx.export(net, dummy_input, "lenet.onnx")

onnx_model = onnx.load("lenet.onnx")

EOF

The user time drops, as now we are spending more time on the GPU than CPU:

real 1m27.829s

user 4m37.266s

sys 0m27.562s

This is a small fork of activatedgeek/LeNet-5 by Ciro Santilli adding better integration and automation for:

- extracting MNIST images as PNG

- ONNX CLI inference taking any image files as input

- a Python tkinter GUI that lets you draw and see inference live

- running on GPU

Install on Ubuntu 24.10:

sudo apt install protobuf-compiler

cd lenet

virtualenv -p python3 .venv

. .venv/bin/activate

pip install -r requirements-python-3-12.txt

Download and extract MNIST train, test accuracy, and generate the ONNX lenet.onnx:

./train.py

Extract MNIST images as PNG:

./extract_pngs.py

Infer some individual images using the ONNX:

./infer.py data/MNIST/png/test/0/*.png

Draw on a GUI and see live inference using the ONNX:

./draw.py

TODO: the following are missing for this to work:

- start a background task. This we know how to do: stackoverflow.com/questions/1198262/tkinter-locks-python-when-an-icon-is-loaded-and-tk-mainloop-is-in-a-thread/79502287#79502287

- get bytes from the canvas: all methods are ugly: stackoverflow.com/questions/9886274/how-can-i-convert-canvas-content-to-an-image

Became notable for performing extremely well on ImageNet starting in 2012.

It is also notable for being one of the first to make successful use of GPU training rather than CPU training.

Object detection model.

You can get some really sweet pre-trained versions of this, typically trained on the COCO dataset.

Deep learning is the name artificial neural networks basically converged to in the 2010s/2020s.

It is a bit of an unfortunate name as it suggests something like "deep understanding" and even reminds one of AGI, which it almost certainly will not attain on its own. But at least it sounds good.


What is backpropagation really doing? by 3Blue1Brown (2017)

Good hand-wave intuition, but does not describe the exact algorithm.

mlcommons.org/en/ Their homepage is not amazingly organized, but it does the job.

Benchmark focused on deep learning. It has two parts: training and inference.

Furthermore, a specific network model is specified for each benchmark in the closed category, so it goes beyond just specifying the dataset.

Results can be seen e.g. at:

Those URLs broke as of 2025 of course, now you have to click on their Tableau down to the 2.1 round and there's no fixed URL for it:

And there are also separate repositories for each:

E.g. on mlcommons.org/en/training-normal-21/ we can see what the benchmarks are:

| Dataset | Model |
|---|---|
| ImageNet | ResNet |
| KiTS19 | 3D U-Net |
| OpenImages | RetinaNet |
| COCO dataset | Mask R-CNN |
| LibriSpeech | RNN-T |
| Wikipedia | BERT |
| 1TB Clickthrough | DLRM |
| Go | MiniGo |

Instructions at:

Ubuntu 22.10 setup with tiny dummy manually generated ImageNet and run on ONNX:

sudo apt install pybind11-dev

git clone https://github.com/mlcommons/inference

cd inference

git checkout v2.1

virtualenv -p python3 .venv

. .venv/bin/activate

pip install numpy==1.24.2 pycocotools==2.0.6 onnxruntime==1.14.1 opencv-python==4.7.0.72 torch==1.13.1

cd loadgen

CFLAGS="-std=c++14" python setup.py develop

cd -

cd vision/classification_and_detection

python setup.py develop

wget -q https://zenodo.org/record/3157894/files/mobilenet_v1_1.0_224.onnx

export MODEL_DIR="$(pwd)"

export EXTRA_OPS='--time 10 --max-latency 0.2'

tools/make_fake_imagenet.sh

DATA_DIR="$(pwd)/fake_imagenet" ./run_local.sh onnxruntime mobilenet cpu --accuracy

Last line of output on P51, which appears to contain the benchmark results:

TestScenario.SingleStream qps=58.85, mean=0.0138, time=0.136, acc=62.500%, queries=8, tiles=50.0:0.0129,80.0:0.0137,90.0:0.0155,95.0:0.0171,99.0:0.0184,99.9:0.0187

where presumably qps means queries per second, and is the main result we are interested in, the more the better.

Running:

tools/make_fake_imagenet.sh

produces a tiny ImageNet subset with 8 images under fake_imagenet/.

fake_imagenet/val_map.txt contains:

val/800px-Porsche_991_silver_IAA.jpg 817

val/512px-Cacatua_moluccensis_-Cincinnati_Zoo-8a.jpg 89

val/800px-Sardinian_Warbler.jpg 13

val/800px-7weeks_old.JPG 207

val/800px-20180630_Tesla_Model_S_70D_2015_midnight_blue_left_front.jpg 817

val/800px-Welsh_Springer_Spaniel.jpg 156

val/800px-Jammlich_crop.jpg 233

val/782px-Pumiforme.JPG 285

The number after each file name is the class index of the image, e.g.:

- 817: 'sports car, sport car',

- 89: 'sulphur-crested cockatoo, Kakatoe galerita, Cacatua galerita',
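To compare runs without eyeballing the logs, the summary line can be parsed into numbers. The sketch below is a hypothetical helper and not part of MLPerf. It assumes the `key=value` layout of the result line shown above and skips the `tiles` percentile list.

```python
import re


def parse_result_line(line):
    """Extract the numeric key=value fields of a run_local.sh summary line."""
    fields = {}
    for key, value in re.findall(r"(\w+)=([0-9.]+)%?", line):
        if key != "tiles":  # the percentile list needs its own parsing
            fields[key] = float(value)
    return fields


line = (
    "TestScenario.SingleStream qps=58.85, mean=0.0138, time=0.136, "
    "acc=62.500%, queries=8, "
    "tiles=50.0:0.0129,80.0:0.0137,90.0:0.0155,95.0:0.0171,99.0:0.0184,99.9:0.0187"
)
fields = parse_result_line(line)
print(fields)
```

The resulting dictionary makes it easy to put `qps` and `acc` from several runs side by side.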

TODO prepare and test on the actual ImageNet validation set, README says:

Prepare the imagenet dataset to come.

Since that one is undocumented, let's try the COCO dataset instead, which uses COCO 2017 and is also a bit smaller. Note that this is not part of MLperf anymore since v2.1, only ImageNet and Open Images are used. But still:

wget https://zenodo.org/record/4735652/files/ssd_mobilenet_v1_coco_2018_01_28.onnx

DATA_DIR_BASE=/mnt/data/coco

export DATA_DIR="${DATA_DIR_BASE}/val2017-300"

mkdir -p "$DATA_DIR_BASE"

cd "$DATA_DIR_BASE"

wget http://images.cocodataset.org/zips/val2017.zip

wget http://images.cocodataset.org/annotations/annotations_trainval2017.zip

unzip val2017.zip

unzip annotations_trainval2017.zip

mv annotations val2017

cd -

cd "$(git-toplevel)"

python tools/upscale_coco/upscale_coco.py --inputs "$DATA_DIR_BASE" --outputs "$DATA_DIR" --size 300 300 --format png

cd -

Now:

./run_local.sh onnxruntime mobilenet cpu --accuracy

fails immediately with:

No such file or directory: '/path/to/coco/val2017-300/val_map.txt

The more plausible looking:

./run_local.sh onnxruntime mobilenet cpu --accuracy --dataset coco-300

first takes a while to preprocess something most likely, which it does only once, and then fails:

Traceback (most recent call last):

File "/home/ciro/git/inference/vision/classification_and_detection/python/main.py", line 596, in <module>

main()

File "/home/ciro/git/inference/vision/classification_and_detection/python/main.py", line 468, in main

ds = wanted_dataset(data_path=args.dataset_path,

File "/home/ciro/git/inference/vision/classification_and_detection/python/coco.py", line 115, in __init__

self.label_list = np.array(self.label_list)

ValueError: setting an array element with a sequence. The requested array has an inhomogeneous shape after 2 dimensions. The detected shape was (5000, 2) + inhomogeneous part.

TODO!
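The same class of error can be reproduced outside of MLPerf. NumPy 1.24 and later refuse to build an array from nested sequences of differing lengths unless `dtype=object` is requested, while older versions only warned. The data below is made up, and whether passing `dtype=object` in coco.py is the right fix is an assumption that was not tested.

```python
import numpy as np

# Each row pairs an id with a variable-length list, so the nested lengths
# differ from row to row (hypothetical data, not the COCO labels).
ragged = [[1, [10, 20]], [2, [30]]]

try:
    np.array(ragged)
    outcome = "converted (older NumPy, possibly with a deprecation warning)"
except ValueError as error:
    outcome = f"ValueError: {error}"
print(outcome)

# Asking for an object array keeps each nested list as one opaque element.
as_objects = np.array(ragged, dtype=object)
print(as_objects.shape)
```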

Let's run on this Imagenet10 subset called Imagenette.

First ensure that you get the dummy test data run working as per MLperf v2.1 ResNet.

Next, in the imagenette2 directory, first let's create a 224x224 scaled version of the inputs as required by the benchmark at mlcommons.org/en/inference-datacenter-21/:

#!/usr/bin/env bash

rm -rf val224x224

mkdir -p val224x224

for syndir in val/*; do

  syn="$(basename "$syndir")"

  mkdir -p "val224x224/$syn"

  for img in "$syndir"/*; do

    convert "$img" -resize 224x224 "val224x224/$syn/$(basename "$img")"

  done

done

and then let's create the val_map.txt file to match the format expected by MLPerf:

#!/usr/bin/env bash

wget https://gist.githubusercontent.com/aaronpolhamus/964a4411c0906315deb9f4a3723aac57/raw/aa66dd9dbf6b56649fa3fab83659b2acbf3cbfd1/map_clsloc.txt

i=0

rm -f val_map.txt

while IFS="" read -r p || [ -n "$p" ]; do

  synset="$(printf '%s\n' "$p" | cut -d ' ' -f1)"

  if [ -d "val224x224/$synset" ]; then

    for f in "val224x224/$synset/"*; do

      echo "$f $i" >> val_map.txt

    done

  fi

  i=$((i + 1))

done < <( sort map_clsloc.txt )

then back on the mlperf directory we download our model:

wget https://zenodo.org/record/4735647/files/resnet50_v1.onnx

and finally run!

DATA_DIR=/mnt/sda3/data/imagenet/imagenette2 time ./run_local.sh onnxruntime resnet50 cpu --accuracy

which gives on P51:

TestScenario.SingleStream qps=164.06, mean=0.0267, time=23.924, acc=87.134%, queries=3925, tiles=50.0:0.0264,80.0:0.0275,90.0:0.0287,95.0:0.0306,99.0:0.0401,99.9:0.0464

where qps presumably means "queries per second". And the time results:

446.78user 33.97system 2:47.51elapsed 286%CPU (0avgtext+0avgdata 964728maxresident)k

The time=23.924 is much smaller than the time executable because of some lengthy pre-loading (TODO not sure what that means) that gets done every time:

INFO:imagenet:loaded 3925 images, cache=0, took=52.6sec

INFO:main:starting TestScenario.SingleStream

Let's try on the GPU now:

DATA_DIR=/mnt/sda3/data/imagenet/imagenette2 time ./run_local.sh onnxruntime resnet50 gpu --accuracy

which gives:

TestScenario.SingleStream qps=130.91, mean=0.0287, time=29.983, acc=90.395%, queries=3925, tiles=50.0:0.0265,80.0:0.0285,90.0:0.0405,95.0:0.0425,99.0:0.0490,99.9:0.0512

455.00user 4.96system 1:59.43elapsed 385%CPU (0avgtext+0avgdata 975080maxresident)k

TODO lower qps on GPU!

Notably, convolution can be implemented in terms of GEMM:
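One common way to do this is the "im2col" trick: copy every kernel-sized window of the image into a row of a matrix, and the convolution becomes a matrix product. The NumPy sketch below is an illustration under simplifying assumptions (single channel, single filter, no padding, stride 1), and it checks itself against a direct loop version.

```python
import numpy as np


def conv2d_direct(image, kernel):
    """Valid cross-correlation (what deep learning calls convolution), via loops."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def conv2d_gemm(image, kernel):
    """Same result, computed as one matrix product over flattened windows."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    # Each row of `patches` is one flattened kh x kw window of the image.
    patches = np.array([
        image[i:i + kh, j:j + kw].ravel()
        for i in range(out_h)
        for j in range(out_w)
    ])
    return (patches @ kernel.ravel()).reshape(out_h, out_w)


rng = np.random.default_rng(0)
image = rng.standard_normal((6, 6))
kernel = rng.standard_normal((3, 3))
print(np.allclose(conv2d_direct(image, kernel), conv2d_gemm(image, kernel)))
```

With several filters, the flattened kernels become the columns of a second matrix, and the whole layer is a single matrix-matrix multiplication.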

The most important thing this project provides appears to be the .onnx file format, which represents ANN models, pre-trained or not.

Deep learning frameworks can then output such .onnx files for interchangeability and serialization.

Some examples:

- activatedgeek/LeNet-5 produces a trained .onnx from PyTorch

- MLperf v2.1 ResNet can use .onnx as a pre-trained model

The cool thing is that ONNX can then run inference in a uniform manner on a variety of devices without installing the deep learning framework used to produce the model. It's a bit like having a kind of portable executable. Neat.

ONNX visualizer.

Matrix multiplication example.

Fundamental since deep learning is mostly matrix multiplication.

NumPy does not automatically use the GPU for it: stackoverflow.com/questions/49605231/does-numpy-automatically-detect-and-use-gpu, and PyTorch is one of the most notable compatible implementations, as it uses the same memory structure as NumPy arrays.

Sample runs on P51 to observe the GPU speedup:

$ time ./matmul.py g 10000 1000 10000 100

real 0m22.980s

user 0m22.679s

sys 0m1.129s

$ time ./matmul.py c 10000 1000 10000 100

real 1m9.924s

user 4m16.213s

sys 0m17.293s

python/pytorch/matmul.py

#!/usr/bin/env python3

# https://cirosantilli.com/_file/python/pytorch/matmul.py

import sys

import torch

print(torch.cuda.is_available())

if len(sys.argv) > 1:

    gpu = sys.argv[1] == 'g'

else:

    gpu = False

if len(sys.argv) > 2:

    n = int(sys.argv[2])

else:

    n = 5

if len(sys.argv) > 3:

    m = int(sys.argv[3])

else:

    m = 5

if len(sys.argv) > 4:

    o = int(sys.argv[4])

else:

    o = 10

if len(sys.argv) > 5:

    repeat = int(sys.argv[5])

else:

    repeat = 10

t1 = torch.ones((n, m))

t2 = torch.ones((m, o))

t3 = torch.zeros(n, o)

if gpu:

    t1 = t1.to('cuda')

    t2 = t2.to('cuda')

    t3 = t3.to('cuda')

for i in range(repeat):

    t3 += t1 @ t2

print(t3)

This section lists specific models that have been implemented in PyTorch.

Contains several computer vision models, e.g. ResNet, all of them including pre-trained versions on some dataset, which is quite sweet.

Documentation: pytorch.org/vision/stable/index.html

pytorch.org/vision/0.13/models.html has a minimal runnable example adapted to python/pytorch/resnet_demo.py.

That example uses a ResNet pre-trained on the COCO dataset to do some inference, tested on Ubuntu 22.10:This first downloads the model, which is currently 167 MB.

cd python/pytorch

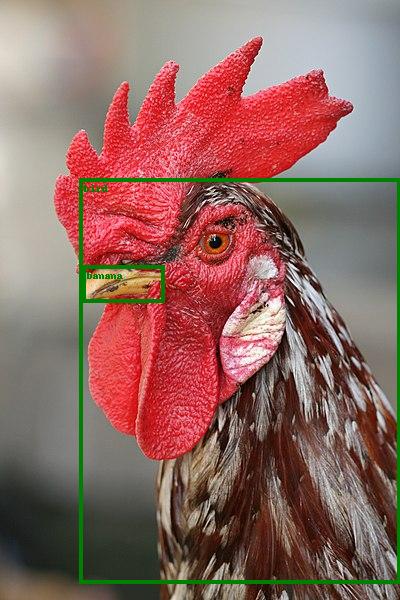

wget -O resnet_demo_in.jpg https://upload.wikimedia.org/wikipedia/commons/thumb/6/60/Rooster_portrait2.jpg/400px-Rooster_portrait2.jpg

./resnet_demo.py resnet_demo_in.jpg resnet_demo_out.jpgWe know it is COCO because of the docs: pytorch.org/vision/0.13/models/generated/torchvision.models.detection.fasterrcnn_resnet50_fpn_v2.html which explains that is an alias for:

FasterRCNN_ResNet50_FPN_V2_Weights.DEFAULTFasterRCNN_ResNet50_FPN_V2_Weights.COCO_V1The runtime is relatively slow on P51, about 4.7s.

After it finishes, the program prints the recognized classes:so we get the expected

['bird', 'banana']bird, but also the more intriguing banana.By looking at the output image with bounding boxes, we understand where the banana came from!

python/pytorch/resnet_demo_out.jpg

. The beak was of course a banana, not a beak!Version of TensorFlow with a Cirq backend that can run in either quantum computers or classical computer simulations, with the goal of potentially speeding up deep learning applications on a quantum computer some day.

Open source software reviews:


Is there nothing standardized besides just raw images?

E.g. www.nist.gov/system/files/documents/2021/02/25/ansi-nist_2007_griffin-face-std-m1.pdf from 2005 by NIST says:

Specify face images because there is no agreement on a standard face recognition template - Unlike finger minutiae ...

so comparing it to fingerprint file formats such as ISO 19794-2. Sad!

Given multiple images, decide how many people show up in these images and when each person shows up.

One particular case of this is for videos, where you also have a timestamp for each image, and way more data.


OK, now we're talking, two liner and you get a window showing bounding box object detection from your webcam feed!

python -m pip install -U yolov5==7.0.9

yolov5 detect --source 0

The accuracy is crap for anything but people. But still. Well done. Tested on Ubuntu 22.10, P51.

fcakyon/yolov5-pip webcam object detection demo by Ciro Santilli (2023)

70,000 28x28 grayscale (1 byte per pixel) images of hand-written digits 0-9, i.e. 10 categories. 60k are considered training data, 10k are considered for test data.

This is THE "OG" computer vision dataset.

Playing with it is the de-facto computer vision hello world.

It was on this dataset that Yann LeCun made great progress with the LeNet model. Running LeNet on MNIST has to be the most classic computer vision thing ever. See e.g. activatedgeek/LeNet-5 for a minimal and modern PyTorch educational implementation.

But it is important to note that as of the 2010's, the benchmark had become too easy for many applications. It is perhaps fair to say that the next big dataset revolution of the same importance was with ImageNet.

The dataset could be downloaded from yann.lecun.com/exdb/mnist/ but as of March 2025 it was down and seems to have broken from time to time randomly, so Wayback Machine to the rescue:

wget \

https://web.archive.org/web/20120828222752/http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz \

https://web.archive.org/web/20120828182504/http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz \

https://web.archive.org/web/20240323235739/http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz \

https://web.archive.org/web/20240328174015/http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

but doing so is kind of pointless as both files use some crazy single-file custom binary format to store all images and labels. OMG!
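The binary format is simple enough to parse by hand though: a big-endian header (magic number 0x803 followed by image count, rows and columns for images, magic number 0x801 followed by the count for labels) and then one unsigned byte per pixel or label. The sketch below is a minimal reader. The function names are made up for this illustration, and the tiny in-memory sample stands in for the real downloads.

```python
import gzip
import struct


def read_gz(path):
    """Return the decompressed bytes of a .gz file such as the MNIST downloads."""
    with gzip.open(path, "rb") as f:
        return f.read()


def parse_idx_images(data):
    """Parse idx3-ubyte bytes into a list of images (lists of pixel rows)."""
    magic, count, rows, cols = struct.unpack(">IIII", data[:16])
    if magic != 0x803:
        raise ValueError(f"unexpected magic number: {magic:#x}")
    size = rows * cols
    pixels = data[16:]
    return [
        [list(pixels[i * size + r * cols:i * size + (r + 1) * cols]) for r in range(rows)]
        for i in range(count)
    ]


def parse_idx_labels(data):
    """Parse idx1-ubyte bytes into a list of integer labels."""
    magic, count = struct.unpack(">II", data[:8])
    if magic != 0x801:
        raise ValueError(f"unexpected magic number: {magic:#x}")
    return list(data[8:8 + count])


# Tiny synthetic sample: two 2x2 images and their two labels.
fake_images = struct.pack(">IIII", 0x803, 2, 2, 2) + bytes([0, 255, 255, 0, 1, 2, 3, 4])
fake_labels = struct.pack(">II", 0x801, 2) + bytes([7, 1])
print(parse_idx_images(fake_images))
print(parse_idx_labels(fake_labels))
```

On the real files this would be called as `parse_idx_images(read_gz("train-images-idx3-ubyte.gz"))`, which yields 28x28 nested lists.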

OK-ish data explorer: knowyourdata-tfds.withgoogle.com/#tab=STATS&dataset=mnist

MNIST image 3 of a '1'.

Same style as MNIST: 28x28 grayscale images, but with clothes rather than hand written digits.

It was designed to be much harder than MNIST, and more representative of modern applications, while still retaining the low resolution of MNIST for simplicity of training.

60,000 tiny 32x32 color images in 10 different classes: airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

TODO release date.

This dataset can be thought of as an intermediate between the simplicity of MNIST, and a more full blown ImageNet.

TODO where to find it: www.kaggle.com/general/50987

Cited on original Generative adversarial network paper: proceedings.neurips.cc/paper_files/paper/2014/file/5ca3e9b122f61f8f06494c97b1afccf3-Paper.pdf

14 million images with more than 20k categories, typically denoting prominent objects in the image, either common daily objects, or a wild range of animals. About 1 million of them also have bounding boxes for the objects. The images have different sizes, they are not all standardized to a single size like MNIST.

Each image appears to have a single label associated to it. Care must have been taken somehow with categories, since some images contain several possible objects, e.g. a person and some object.

Official project page: www.image-net.org/

The data license is restrictive and forbids commercial usage: www.image-net.org/download.php. Also as a result you have to login to download the dataset. Super annoying.

How to visualize: datascience.stackexchange.com/questions/111756/where-can-i-view-the-imagenet-classes-as-a-hierarchy-on-wordnet

The categories are all part of WordNet, which means that there are several parent/child categories such as dog vs type of dog available. ImageNet1k only appears to have leaf nodes however (i.e. no "dog" label, just specific types of dog).


Subset generators:

- github.com/mf1024/ImageNet-datasets-downloader generates on download, very good. As per github.com/mf1024/ImageNet-Datasets-Downloader/issues/14 counts go over the limit due to bad multithreading. Also unfortunately it does not start with a subset of 1k.

- github.com/BenediktAlkin/ImageNetSubsetGenerator

Unfortunately, since ImageNet is a closed standard no one can upload such pre-made subsets, forcing everybody to download the full dataset, in ImageNet1k, which is huge!

An imagenet10 subset by fast.ai.

Size of full sized image version: 1.5 GB.

Subset of ImageNet. About 167.62 GB in size according to www.kaggle.com/competitions/imagenet-object-localization-challenge/data.

Contains 1,281,167 images and exactly 1k categories which is why this dataset is also known as ImageNet1k: datascience.stackexchange.com/questions/47458/what-is-the-difference-between-imagenet-and-imagenet1k-how-to-download-it

www.kaggle.com/competitions/imagenet-object-localization-challenge/overview clarifies a bit further how the categories are inter-related according to WordNet relationships:

The 1000 object categories contain both internal nodes and leaf nodes of ImageNet, but do not overlap with each other.

image-net.org/challenges/LSVRC/2012/browse-synsets.php lists all 1k labels with their WordNet IDs.

n02119789: kit fox, Vulpes macrotis

n02100735: English setter

n02096294: Australian terrier

There is a bug on that page however towards the middle:

n03255030: dumbbell

href="ht:

n02102040: English springer, English springer spaniel

and there is one missing label if we ignore that dummy href= line. A thing of beauty!

Also the lines are not sorted by synset, if we do then the first three lines are:

n01440764: tench, Tinca tinca

n01443537: goldfish, Carassius auratus

n01484850: great white shark, white shark, man-eater, man-eating shark, Carcharodon carcharias

gist.github.com/aaronpolhamus/964a4411c0906315deb9f4a3723aac57 has lines of type:

n02119789 1 kit_fox

n02100735 2 English_setter

n02110185 3 Siberian_husky

therefore numbered on the exact same order as image-net.org/challenges/LSVRC/2012/browse-synsets.php

gist.github.com/yrevar/942d3a0ac09ec9e5eb3a lists all 1k labels as a plaintext file with their benchmark IDs.

{0: 'tench, Tinca tinca',

1: 'goldfish, Carassius auratus',

2: 'great white shark, white shark, man-eater, man-eating shark, Carcharodon carcharias',

therefore numbered on sorted order of image-net.org/challenges/LSVRC/2012/browse-synsets.php

The official line numbering in-benchmark-data can be seen at LOC_synset_mapping.txt, e.g. www.kaggle.com/competitions/imagenet-object-localization-challenge/data?select=LOC_synset_mapping.txt

n01440764 tench, Tinca tinca

n01443537 goldfish, Carassius auratus

n01484850 great white shark, white shark, man-eater, man-eating shark, Carcharodon carcharias

huggingface.co/datasets/imagenet-1k also has some useful metrics on the split:

- train: 1,281,167 images, 145.7 GB zipped

- validation: 50,000 images, 6.67 GB zipped

- test: 100,000 images, 13.5 GB zipped
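The "sorted order" numbering of labels discussed above is easy to reproduce: sort the synset IDs and use the position of each as its benchmark ID. The sketch below only uses six synsets quoted in this section, so only the IDs of the three synsets that sort first match the real 1000 entry list.

```python
# Synset IDs quoted above, in the unsorted order in which they were listed.
synsets = [
    "n02119789",  # kit fox
    "n02100735",  # English setter
    "n02096294",  # Australian terrier
    "n01440764",  # tench
    "n01443537",  # goldfish
    "n01484850",  # great white shark
]

# Benchmark-style ID: position of the synset once the list is sorted.
sorted_index = {synset: i for i, synset in enumerate(sorted(synsets))}
for synset in ("n01440764", "n01443537", "n01484850"):
    print(synset, sorted_index[synset])
```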

The official page: www.image-net.org/challenges/LSVRC/index.php points to a download link on Kaggle: www.kaggle.com/competitions/imagenet-object-localization-challenge/data Kaggle says that the size is 167.62 GB!

To download from Kaggle, create an API token on kaggle.com, which downloads a kaggle.json file then:

mkdir -p ~/.kaggle

mv ~/down/kaggle.json ~/.kaggle

python3 -m pip install kaggle

kaggle competitions download -c imagenet-object-localization-challenge

The download speed is heavily limited by the server and takes A LOT of hours. Also, the tool does not seem able to pick up where you stopped last time.

Another download location appears to be: huggingface.co/datasets/imagenet-1k on Hugging Face, but you have to login due to their license terms. Once you login you have a very basic data explorer available: huggingface.co/datasets/imagenet-1k/viewer/default/train.


From cocodataset.org/:

- 330K images (>200K labeled)

- 1.5 million object instances

- 80 object categories

- 91 stuff categories

- 5 captions per image. A caption is a short textual description of the image.

So they have relatively few object labels, but their focus seems to be putting a bunch of objects on the same image. E.g. they have 13 cat plus pizza photos. Searching for such weird combinations is kind of fun.

Their official dataset explorer is actually good: cocodataset.org/#explore

And the objects don't just have bounding boxes, but detailed polygons.
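To make the polygon vs bounding box difference concrete, here is a small sketch. It assumes the COCO annotation convention where a polygon is a flat list [x1, y1, x2, y2, ...] and a bounding box is [x, y, width, height]. The annotation dictionary below is made up for illustration and is not taken from the dataset.

```python
def polygon_area(flat):
    """Area of a polygon given as [x1, y1, x2, y2, ...] (shoelace formula)."""
    xs, ys = flat[0::2], flat[1::2]
    n = len(xs)
    twice_area = sum(
        xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n)
    )
    return abs(twice_area) / 2


def polygon_bbox(flat):
    """Tightest [x, y, width, height] box around a flat polygon."""
    xs, ys = flat[0::2], flat[1::2]
    return [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)]


# Hypothetical annotation: one object outlined by a single triangle.
annotation = {"segmentation": [[10, 10, 50, 10, 10, 40]]}

polygon = annotation["segmentation"][0]
area = polygon_area(polygon)
bbox = polygon_bbox(polygon)
print("polygon area:", area)
print("bbox:", bbox, "bbox area:", bbox[2] * bbox[3])
```

The triangle covers only half of its bounding box, which is the kind of detail that is lost when only boxes are annotated.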

Also, images have captions describing the relation between objects:

a black and white cat standing on a table next to a pizza.

Epic.

This dataset is kind of cool.

This is the one used on MLPerf v2.1 ResNet, likely one of the most popular choices out there.

2017 challenge subset:

- train: 118k images, 18GB

- validation: 5k images, 1GB

- test: 41k images, 6GB

TODO vs COCO dataset.

As of v7:

- ~9M images

- 600 object classes

- bounding boxes

- visual relationships are really hard: storage.googleapis.com/openimages/web/factsfigures_v7.html#visual-relationships e.g. "person kicking ball": storage.googleapis.com/openimages/web/visualizer/index.html?type=relationships&set=train&c=kick

- google.github.io/localized-narratives/ localized narratives is ludicrous: you can actually hear the annotators (mostly Indian women) describing the image while hovering their mouse to point at what they are talking about. They are clearly bored out of their minds, the poor people!

This section is about companies that primarily specialize in machine learning.

The term "machine learning company" is perhaps not great, as it could be argued that any of the Big Tech companies are leaders in machine learning, and sometimes, especially in the case of Google, they have a main product that is arguably a form of machine learning.

Most of the companies in this section are likely going to be from the AI boom era.


reflection.ai/

Building Frontier Open Intelligence

Their one letter name is extremely annoying! The previous "Holistic AI" was so much saner.

They claim to want to achieve AGI, but it is not clear if they are just going to try larger LLMs or if they have something actual in mind.

Their main initial product thing seems to be browser automation which is cool.

Interesting website, hosts mostly:

- datasets

- ANN models

- some live running demos called "apps": e.g. huggingface.co/spaces/ronvolutional/ai-pokemon-card

What's the point of this website vs GitHub? www.reddit.com/r/MLQuestions/comments/ylf4be/whats_the_deal_with_hugging_faces_popularity/


Many people believe that knowledge graphs are a key element of AGI: Knowledge graph as a component of AGI.

Bibliography:

- www.knowledgegraph.tech/ The Knowledge graph Conference

Related:

- twitter.com/yoheinakajima/status/1759107727463518702 "smallest RAG test possible of an indirect relationship on a knowledge graph"

- www.quora.com/Do-knowledge-graphs-bases-have-a-place-in-the-pursuit-of-artificial-general-intelligence-AGI-or-can-their-features-be-better-represented-in-a-learning-based-system "Do knowledge graphs / bases have a place in the pursuit of artificial general intelligence (AGI), or can their features be better represented in a learning-based system?"

- Mentions the interesting sounding "Attempto" project:

This is one of those idealistic W3C specifications with super messy implementations all over.

Reasonable introduction: www.w3.org/TR/owl2-primer/

Example: rdf/vcard.ttl.

Implemented by:

In this tutorial, we will use the Jena SPARQL hello world as a starting point. Tested on Apache Jena 4.10.0.

Basic query on rdf/vcard.ttl RDF Turtle data to find the person with full name "John Smith":

sparql --data=rdf/vcard.ttl --query=<( printf '%s\n' 'SELECT ?x WHERE { ?x <http://www.w3.org/2001/vcard-rdf/3.0#FN> "John Smith" }')

Output:

---------------------------------

| x |

=================================

| <http://somewhere/JohnSmith/> |

---------------------------------

To avoid writing http://www.w3.org/2001/vcard-rdf/3.0# a billion times as queries grow larger, we can use the PREFIX syntax:

sparql --data=rdf/vcard.ttl --query=<( printf '%s\n' '

PREFIX vc: <http://www.w3.org/2001/vcard-rdf/3.0#>

SELECT ?x

WHERE { ?x vc:FN "John Smith" }

')

Output:

---------------------------------

| x |

=================================

| <http://somewhere/JohnSmith/> |

---------------------------------

Bibliography:

- UniProt contains some amazing examples runnable on their servers: sparql.uniprot.org/.well-known/sparql-examples/
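To get a feeling for what the "John Smith" query above does, here is a toy pure Python sketch of matching a single triple pattern. It is not how Apache Jena works internally. The match function is made up for illustration, and the second triple is a made-up extra entry rather than a quote from rdf/vcard.ttl.

```python
FN = "http://www.w3.org/2001/vcard-rdf/3.0#FN"

# (subject, predicate, object) triples. The first one corresponds to the
# query output shown above; the second is a hypothetical extra person.
triples = [
    ("http://somewhere/JohnSmith/", FN, "John Smith"),
    ("http://somewhere/SomeoneElse/", FN, "Someone Else"),
]


def match(pattern, triples):
    """Return one {variable: value} binding per triple matching the pattern.

    Pattern terms starting with '?' are variables, anything else must be
    equal to the corresponding term of the triple.
    """
    results = []
    for triple in triples:
        binding = {}
        for want, have in zip(pattern, triple):
            if want.startswith("?"):
                # A variable used twice must bind to the same value.
                if binding.setdefault(want, have) != have:
                    break
            elif want != have:
                break
        else:
            results.append(binding)
    return results


# Rough equivalent of: SELECT ?x WHERE { ?x vc:FN "John Smith" }
rows = match(("?x", FN, "John Smith"), triples)
print(rows)
```

Real SPARQL engines join many such patterns together and use indexes instead of scanning every triple.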


The CLI tools don't appear to be packaged for Ubuntu 23.10? Annoying... There is a package libapache-jena-java but it doesn't contain any binaries, only Java library files.

To run the CLI tools easily we can download the prebuilt:

sudo apt install openjdk-22-jre

wget https://dlcdn.apache.org/jena/binaries/apache-jena-4.10.0.zip

unzip apache-jena-4.10.0.zip

cd apache-jena-4.10.0

export JENA_HOME="$(pwd)"

export PATH="$PATH:$(pwd)/bin"

and we can confirm it works with:

sparql -version

which outputs:

Apache Jena version 4.10.0

If your Java is too old then running sparql with the prebuilts fails with:

Error: A JNI error has occurred, please check your installation and try again

Exception in thread "main" java.lang.UnsupportedClassVersionError: arq/sparql has been compiled by a more recent version of the Java Runtime (class file version 55.0), this version of the Java Runtime only recognizes class file versions up to 52.0

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:756)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:473)

at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:621)

Build from source is likely something like:

sudo apt install maven openjdk-22-jdk

git clone https://github.com/apache/jena --branch jena-4.10.0 --depth 1

cd jena

mvn clean install

TODO test it.

If you make the mistake of trying to run the source tree without building it:

git clone https://github.com/apache/jena --branch jena-4.10.0 --depth 1

cd jena

export JENA_HOME="$(pwd)"

export PATH="$PATH:$(pwd)/apache-jena/bin"

it fails with:

Error: Could not find or load main class arq.sparql

as per: users.jena.apache.narkive.com/T5TaEszT/sparql-tutorial-querying-datasets-error-unrecognized-option-graph

They have a tutorial at: jena.apache.org/tutorials/sparql.html

Once you've done the Apache Jena CLI tools setup we can query all users with Full Name (FN) "John Smith" directly from the rdf/vcard.ttl Turtle RDF file with the rdf/vcard.rq SPARQL query:

sparql --data=rdf/vcard.ttl --query=rdf/vcard.rq

and that outputs:

---------------------------------

| x |

=================================

| <http://somewhere/JohnSmith/> |

---------------------------------

Bibliography:

Hello world: stackoverflow.com/questions/16829351/is-there-a-hello-world-example-for-sparql-with-rdflib

Many good mentions at: Obituary for the greatest monument to logical AGI by Liu Yuxi. They were largely funded by the

It appears to only extract structured data from Wikipedia, not natural language, so it is kind of basic then.

MediaWiki extension that does this:

... the capital city is [[Has capital::Berlin]] ...

It didn't take off and is not in main Wikipedia. Kind of cool though.

Groups concepts by hyponymy and hypernymy and meronymy and holonymy. That actually makes a lot of sense! TODO: is there a clear separation between hyponymy and meronymy?

Browse: wordnetweb.princeton.edu/perl/webwn Appears dead as of 2025 lol.

The online version of WordNet has been deprecated and is no longer available.

Does not contain intermediate scientific terms, only very common ones, e.g. no mention of "Josephson effect" or "photoelectric effect".
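As a rough illustration of the kind of grouping described above, the sketch below stores hypernymy ("is a kind of") and meronymy ("is a part of") as two separate relations. The words and links are hand-written toy data, not taken from WordNet, and real WordNet entries can have more than one hypernym, which this sketch ignores.

```python
# Toy data, hand-written for illustration only.
HYPERNYM = {  # word -> more general word ("is a kind of")
    "dog": "canine",
    "canine": "carnivore",
    "carnivore": "mammal",
    "mammal": "animal",
}
PART_OF = {  # part -> whole ("is a part of")
    "paw": "dog",
    "wheel": "car",
}


def hypernym_chain(word):
    """Follow 'is a kind of' links upwards until there are none left."""
    chain = []
    while word in HYPERNYM:
        word = HYPERNYM[word]
        chain.append(word)
    return chain


print(hypernym_chain("dog"))
# A paw is a part of a dog, but it is not a kind of dog:
print(PART_OF["paw"], hypernym_chain("paw"))
```

Keeping the two relations in separate tables is one simple way to keep them apart in code. It does not settle the TODO above about how clear the separation is in the data itself.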

A pair of Australian deep learning training providers/consultants that have produced a lot of good free learning materials:

Authors:

- twitter.com/jeremyphoward Jeremy Howard

- twitter.com/math_rachel Rachel Thomas

The approach of this channel of exposing recent research papers is a "honking good idea" that should be taken to other areas beyond just machine learning. It takes a very direct stab at the missing link between basic and advanced!

Ciro Santilli OurBigBook.com